Emulab Storage

IMPORTANT NOTE!

Emulab's Storage subsystem is under active development, so things described in this document may change - check back for updates.

NEW: Persistent blockstores have been implemented! See the Persistence section for details and Persistent Blockstore Examples for example use.

Also be sure to read the Implementation Issues and Caveats section!

Emulab Storage at a Glance

Even without asking for them, Emulab provides you with some storage resources both inside and outside of experiments. Outside of any single experiment, and shared across experiments, are the /proj, /groups, /users, /scratch, and /share file systems. These are available on the 'users' server at the Emulab site you're on, as well as on the nodes in your experiments (via a network filesystem). Other documentation describes why each of these areas exists, and what you can do with them.

Each node in an experiment also has its own storage resources, in the form of locally attached disks. Emulab's OS image management subsystem makes use of storage resources on each node, claiming space on the primary 'system' volume (what the BIOS would by default identify as the boot volume). In most cases, the OS image does not consume all of a node's local storage, and what is left is free for use by experimenters. Along with this extra system disk space, any other supplementary disks present on the node are available for storage. Emulab also provides a tool called mkextrafs to make the unallocated local space on a node available for use. You can manage copying/synchronizing files local to your nodes with the loghole utility.

In Spring 2013, Emulab introduced a framework that makes storage a first-class notion. Users may specify storage objects in their NS files and attach them to nodes in their experiment. These objects can be composed in various ways from underlying storage elements. The framework has been designed with flexible composition in mind, ultimately bounded by what the volume management and filesystem constructs on modern operating systems allow. The implementation takes a pragmatic approach, leaving some of the more esoteric and complex functionality out until and unless it is deemed useful. Plenty of utility remains, however, allowing for various storage layouts involving node-local disk space, as well as arbitrarily many remote storage elements (iSCSI block devices). Note that not all resource combinations are valid or available; Emulab will respond to such constructions with errors or a failure to map resources.

Storage Object Overview

Emulab storage objects can be distilled into elements and compounds. Storage elements are resources that, from a particular node's perspective, are treated as a single unit. These elements may be local disks (SSD, spinning magnetic, USB flash, etc.), or remote resources (iSCSI block devices). Whatever they are, the node consuming these elements didn't compose them, and so can't break them down further. However, it may use these elements in its own storage compositions (e.g., volumes with partitions and filesystems). Of course, not all compositions are possible given operating system and physical resource constraints. This document gives experimenters guidance on what they can put together, and how.

Classes of Storage

An object's class is a coarse-grained grouping, such as local block or shared remote storage. Storage objects are further identified and defined by their attributes, which are constrained to what is available in their class. For example, if an object is in the "SAN" class, then the available bus protocols may be "iSCSI" and "FibreChannel". For the "local" class, storage objects have a "placement" attribute to help direct where to allocate the object. Experimenters can use these storage class attributes to fine-tune their requests and compositions.

Storage Origins

Emulab storage objects originate from a few different sources. Two storage origins are currently supported: shared-host and node-local.

- Shared-Host

Shared storage hosts are infrastructure nodes that export storage objects to multiple consumers across projects, groups, experiments, etc. Think of them as storage arrays or servers that are not themselves in your experiment, but from which storage objects can be allocated to your experiment. In our current implementation, performance for shared storage objects is effectively best effort. The mapping software attempts to spread the allocations out, but true isolation is not present. Emulab does not currently allocate bandwidth for individual objects - when you use them, you are in the fray with the rest. Performance artifacts are a distinct possibility. An advantage of allocating storage objects from shared hosts is that there is generally more space available.

- Node-Local

- Future Origins

Persistence

Emulab storage objects also have two possible lifetimes: ephemeral or persistent.

- Ephemeral

Ephemeral blockstores exist only for the duration of an experiment swapin. If you associate an ephemeral blockstore with an experiment via the NS file (see the examples below), then every time you swap that experiment in you will get a new instance of the blockstore. Ephemeral blockstores are appropriate for experiments that require a large amount of temporary storage space, typically because they produce a large amount of data. They may be less well suited to experiments that consume a large data set, because that data set must be loaded onto the node after each swapin (and possibly saved off again prior to swapout if the data set is mutated).

In general, the size of an ephemeral blockstore is limited only by the size of the node-local disks (typically 10s to 100s of GB) or by the available space in the designated ephemeral blockstore areas of the shared storage hosts (typically 1s to 10s of TB).

Note that currently, node-local blockstores are always ephemeral. In the future, we might add a mechanism to support "persistent" node-local blockstores by implicitly loading and unloading the data at each swapin and swapout.

- Persistent

Persistent blockstores endure beyond an experiment swapin and even the lifetime of an individual experiment. Persistent blockstores are associated with projects and can be used by any experiment within a project, though only by one experiment at a time. Persistent blockstores are appropriate for evolving data sets (e.g., a large database), or data sets that are used by many different experiments (e.g., VM images or a collection of Linux source trees). It is important to note that since these blockstores are associated with projects and not users, you cannot use a persistent blockstore to carry around your "personal stuff", both because you cannot prevent access by other people in the project and because you could not access the blockstore from an experiment in another project you are a member of. We have designed, but not yet implemented, a permission mechanism that will make this possible in the future.

The lifetime of a persistent blockstore has several stages. First a blockstore must be created with a specific type (see below), size, and end (expiration) time. A blockstore can be created by any user in a project that has the ability to create experiments ("local-root" privilege). Once created, a blockstore is in the unapproved state, and must be "approved" before it can be used. Within certain limits of size and duration, persistent blockstores are automatically approved at creation time. All others require explicit approval by a testbed administrator. After approval, the blockstore is considered valid and can be used in experiments. Eventually, due either to reaching the explicit end date or because it has been idle too long, a persistent blockstore will be deemed "expired" and will enter the grace state. During this grace period, the blockstore will continue to be accessible (though possibly only in a read-only fashion) to allow a user to migrate the data to a new location outside of Emulab. Alternatively, a user may be allowed to extend the lifetime of the blockstore by a fixed amount, returning it to the valid state. At the end of the grace period, the dataset will automatically either be destroyed or made inaccessible (placed into the locked state), depending on the system policy.

There are two types of persistent blockstores intended to address two broad usage patterns. So-called "long-term datasets" (type: ltdataset) are what you would think of as "persistent": they stick around for as long as they are useful; i.e., they expire only after having been unused for a long time rather than at a fixed date, and then are locked down rather than automatically destroyed. Every project has a quota determining how much total space can be allocated to its long-term datasets. Thus, the only limit on the size of such a dataset is the amount of space remaining in the quota, and project members can negotiate among themselves as to how the space is used. "Short-term datasets" (type: stdataset) are for situations where you want to run a series of experiments over a short time period (days to weeks) using the same large data set but where an ephemeral blockstore may not be practical (e.g., it takes hours to initialize the data set or you want to run experiment instances on several different machine types). Short-term datasets are not subject to the per-project quota (their size is instead limited by a system-wide maximum value), are limited to a relatively short and fixed lifespan (again by a system-wide maximum value), and are automatically destroyed at the end of that time period.
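The lifecycle described in the two paragraphs above can be summarized as a small state machine. The shell sketch below is purely illustrative. The state names (unapproved, valid, grace, locked, destroyed) come from the text. The event names (approve, expire, extend, grace-end) and the `next_state` function are hypothetical and are not part of Emulab. It assumes the per-type disposition described above: long-term datasets are locked and short-term datasets are destroyed.

```sh
#!/bin/sh
# Hypothetical model of the persistent-blockstore lifecycle described above.
# Event names and this function are illustrative only; this is not Emulab code.
# Usage: next_state <state> <event> <type>   (type: ltdataset | stdataset)
next_state() {
    state=$1 event=$2 type=$3
    case "$state:$event" in
        unapproved:approve) echo valid ;;   # automatic within limits, else by an admin
        valid:expire)       echo grace ;;   # end date reached, or idle too long
        grace:extend)       echo valid ;;   # lifetime extended by a fixed amount
        grace:grace-end)
            # What happens at the end of the grace period depends on the type.
            case $type in
                ltdataset) echo locked ;;
                stdataset) echo destroyed ;;
                *) return 1 ;;
            esac ;;
        *) return 1 ;;                      # transition not described in the text
    esac
}
```

For example, `next_state grace grace-end ltdataset` prints `locked`, while the same call with `stdataset` prints `destroyed`.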

Filesystem Support

Ephemeral Blockstores

Emulab provides a high-level way to ask for filesystem creation on top of block storage. If you declare a mount point attribute for your storage object, Emulab will infer that you want a filesystem created on that object (and mounted where you specified!). There is currently no way to ask for a specific filesystem or modify its attributes via this mechanism. What you get will be a "sensible" filesystem for the OS and storage class in question, with reasonable default settings. For example, on an Ubuntu Linux image, you'll get ext4. On FreeBSD, you'll get UFS2. For Node-Local storage, the Emulab client-side will use logical volume management (lvm2 on Linux; zfs on FreeBSD) to aggregate space as necessary to fulfill your request. The full details are shown below in the "Asking for a filesystem" section. For remote (SAN) space, you can only specify a mount point if there is only a single node connected to it; more than one connected node implies a more sophisticated setup that would involve a cluster-aware filesystem (or something similar). We assume you would want to set up such things yourself.

Persistent Blockstores

In the current implementation, Emulab will not automatically create a filesystem on a persistent blockstore on first use as it does for ephemeral blockstores. Instead, you must either use the -f option to createdataset or create the filesystem manually the first time you use the blockstore. Once you have a filesystem on a blockstore, you can use the set-mountpoint method in your experiment description to have it automatically mounted at swapin time.

createdataset (and the client-side automatic mounting code) currently supports the following filesystems: ext2, ext3, ext4, and ufs. If you need a different filesystem, or want a volume manager such as LVM or ZFS, then you will have to handle creation and management yourself; i.e., do not use 'createdataset -f' or set-mountpoint. When creating a filesystem on a persistent blockstore, keep in mind that filesystems are generally operating system specific. If you are planning on using only recent Linux images on your nodes, then ext3 or ext4 is your best bet. For FreeBSD only, use ufs of course. If you want to use your blockstore with either Linux or FreeBSD, use ext3.
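The filesystem advice above boils down to a simple rule. As a sketch, the hypothetical `pick_fs` shell function below returns a value for createdataset's -f option. Its argument says which OS families will use the blockstore. It picks ext4 for Linux-only use, although the text notes that ext3 would also be fine there.

```sh
#!/bin/sh
# Hypothetical helper encoding the filesystem advice above.
# Usage: pick_fs linux|freebsd|both
pick_fs() {
    case $1 in
        linux)   echo ext4 ;;   # ext3 would also work on recent Linux images
        freebsd) echo ufs ;;
        both)    echo ext3 ;;   # usable from both Linux and FreeBSD
        *)       echo "usage: pick_fs linux|freebsd|both" >&2; return 1 ;;
    esac
}

# Example (on the users node):
# /usr/testbed/bin/createdataset -t ltdataset -s 102400 -f "$(pick_fs both)" testbed/images
```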

See the examples section below for a concrete example of using a filesystem.

Ephemeral Blockstore Examples

Below are a couple of examples depicting the use of local and remote storage to help tie together the concepts explained previously.

Local Storage

Local block storage objects replace the mkextrafs mechanism previously employed by users to set up extra space on their local nodes. Here we'll show a sample setup of two nodes, one using free space on the system volume, and the other using space on the non-system disk area. We'll connect the nodes by a simple link just for fun.

    # Input Emulab definitions
    source tb_compat.tcl

    # Create simulator object
    set ns [new Simulator]

    #
    # Define the nodes
    #
    set node1 [$ns node]
    tb-set-node-os $node1 "UBUNTU16-64-STD"

    set node2 [$ns node]
    tb-set-node-os $node2 "FBSD103-64-STD"

    # Sample link - because we are running some kind of network experiment, right?
    set link1 [$ns duplex-link $node1 $node2 100Mbps 5ms DropTail]

    #
    # Define and bind the blockstores (local storage)
    #
    # The "bs1" storage object specification here is the equivalent of
    # doing "mkextrafs /mnt/extra" on node1.
    set bs1 [$ns blockstore]
    $bs1 set-class "local"
    $bs1 set-placement "sysvol"
    $bs1 set-mount-point "/mnt/extra"
    $bs1 set-node $node1

    set bs2 [$ns blockstore]
    $bs2 set-class "local"
    $bs2 set-size "100GB"
    $bs2 set-placement "nonsysvol"
    $bs2 set-mount-point "/mnt/extra"
    $bs2 set-node $node2

    # Static routing (though it doesn't really matter for this simple topology)
    $ns rtproto Static

    # Go!
    $ns run

The node declarations at the top are done as usual. Next come the blockstore declarations. You'll notice that we've told Emulab a few things about each by setting attributes. The most important bit here is that we've specified the class to be local for the blockstores in this example. For the first blockstore (bs1), we told Emulab to bind it to node1 and have it span the rest of the space on the system volume (sysvol placement, no size specified). We've also asked that bs1 be mounted on /mnt/extra; this implies to Emulab that a local filesystem should be created on top of this blockstore. Since the associated node's OS is UBUNTU16-64-STD, the client-side code there will create an ext4 filesystem.

For blockstore bs2, we've asked for a local blockstore to be created on node2. This time we have indicated that it be placed on non-system disk space. The size is explicitly set to 100GB, and again Emulab is informed (with set-mount-point) that a filesystem should be created and mounted at /mnt/extra. Since node2's OS is FBSD103-64-STD, the client-side will set up a ZFS filesystem, created from a "zpool" that spans all non-system disks. Note that because node2 hosts bs2, it will only be allocated on a node type that has at least two disks, since the nonsysvol placement requires a disk other than the system disk.

Finally, note that these local blockstores are only available to the individual nodes they are bound to: bs1 is available on node1, and bs2 is available on node2. However, stepping outside of the Emulab mechanisms, one could set up NFS shares or the like to make the space available to the other node.

Note: These spaces are ephemeral! They will go away (along with their contents) when the experiment is swapped out! It is up to the experimenter to copy out or otherwise actively save off what s/he wants to keep.

Remote Storage

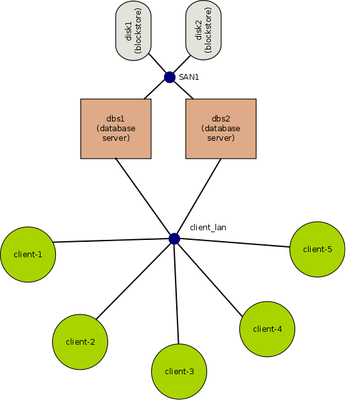

This example walks through an experiment employing Shared Host storage objects. Assume that we are interested in running an experiment involving a High Availability database. Our setup will include a handful of client nodes connected to two database server nodes via a LAN. The two database servers will consume a small number of shared storage objects where they will store the database files. The accompanying diagram depicts this setup.

Assume that we have already built up and captured an OS image with all of the required database software, called UBUNTU16-64-MYDB. This image should have been derived from an existing Emulab image that supports the storage object bus protocol we are interested in (in this case, iSCSI block devices).

The NS file representing this experiment setup looks like this:

    # Input Emulab definitions
    source tb_compat.tcl

    # Create the simulator object
    set ns [new Simulator]

    #
    # *** Define the storage objects
    #
    set disk1 [$ns blockstore]
    $disk1 set-class "SAN"
    $disk1 set-size "50GiB"
    $disk1 set-protocol "iSCSI"

    set disk2 [$ns blockstore]
    $disk2 set-class "SAN"
    $disk2 set-size "25GiB"
    $disk2 set-protocol "iSCSI"

    #
    # Define the database servers
    #
    set dbs1 [$ns node]
    tb-set-node-os $dbs1 "UBUNTU16-64-MYDB"

    set dbs2 [$ns node]
    tb-set-node-os $dbs2 "UBUNTU16-64-MYDB"

    #
    # Connect the storage objects and servers together.
    #
    set san1 [$ns make-san "$dbs1 $dbs2 $disk1 $disk2"]

    #
    # Create the clients. Use a loop here to make the number flexible.
    #
    set num_clients 5
    set client_lan_str ""
    for {set i 1} {$i <= $num_clients} {incr i} {
        # Create the node
        set client($i) [$ns node]
        # Set the OS
        tb-set-node-os $client($i) "UBUNTU16-CLIENT"
        # Add to client LAN string
        append client_lan_str "$client($i) "
    }

    # Now create the client lan. Tack the DB servers on to the end of the
    # list of clients previously created in the loop.
    set client_lan [$ns make-lan "$client_lan_str $dbs1 $dbs2" 1Gb 0ms]

    # Use static IP routing
    $ns rtproto Static

    # Execute!
    $ns run

Of note in this NS file:

- Startup command / event sequences omitted

To keep things clean and focused on the storage object aspects of this example, we elide some other usual directives for automating experiments, such as startup commands and event sequences.

- blockstore objects and class

We have indicated to Emulab that both blockstore objects are of class SAN. A "class" declaration is required, as is "size" (for SAN volumes). Everything else is optional. Had we not specified "iSCSI" as the bus protocol, Emulab would have been free to use any compatible and available transport (we only have iSCSI support right now though).

- "GiB" in the size specification

Emulab automatically converts all size specifications to mebibytes (2^20 bytes). Base-10 units can be used, but they will be rounded down to the nearest mebibyte.

- The "san1" SAN specification

Notice how the construction of a "san" object looks similar to that of a "lan" object? "make-san" is just syntactic sugar that creates a lan with the members specified, but nullifies link/lan shaping (no latency, loss, or artificial bandwidth caps). Another noteworthy thing here is that we put the blockstore objects directly into this SAN without fixing/binding them to any particular host. This tells Emulab that these remote blockstores should be allocated from shared (infrastructure) storage hosts. The parser and mapper do some dirty work under the hood to make this happen. After swapping in, you'll notice a side effect: "blockstore-vm" entries appear among your experimental nodes. These are simply encapsulations the system uses to represent the storage objects.

A word of caution: We have explicitly set up remote storage objects (iSCSI blockstores) that are shared among hosts. Trying to set up such a configuration with two or more nodes and using a standard filesystem, mounted on all of them at the same time, would have catastrophic results. This is because most filesystem implementations assume they have the block device to themselves. Multiple reader/writer applications and filesystems exist for this sort of shared scenario, but a discussion of them is out of scope for this document.

Another word of caution: The blockstores in this example are ephemeral! Their contents will go away when your experiment is swapped out or terminated. If you need data that outlives a swapin, use a persistent blockstore instead (see the Persistence section).

Persistent Blockstore Examples

For the most part, using a persistent blockstore is essentially the same as using an ephemeral blockstore, except that it must be explicitly created rather than just coming into existence when an experiment is swapped in. Currently, this is done using a CLI on the Emulab 'users' node. Eventually there will be a tab or menu item under the Project Information web page, but for now it is all old school.

NOTE: In the following, we use the terms lease, dataset, and persistent blockstore interchangeably unless otherwise noted. dataset is just an alias for persistent blockstore, while lease refers to the generic mechanism underlying the implementation of datasets. Don't think about it too much, just go with it!

Creating a Persistent Blockstore

Log in to the users node (e.g., users.emulab.net) and use the createdataset command to create a short- or long-term dataset of the appropriate size with an optional expiration date. Optionally, you can also pre-create a filesystem on the blockstore.

Creating a long-term dataset:

To create a 100 GiB (1 GiB = 1024 * 1024 * 1024 bytes) long-term dataset named "images" in the "testbed" project with an ext4 filesystem, you would do the following. The -s size is given in mebibytes, so 100 GiB is 100 * 1024 = 102400:

/usr/testbed/bin/createdataset -t ltdataset -s 102400 -f ext4 testbed/images

for which you should get a response:

    WARNING: FS creation can take 5 minutes or longer, be patient!
    Created lease 'testbed/images' for long-term dataset, never expires.

Note that without specifying an explicit expiration date, you get the default for long-term datasets at your site. For the Utah Emulab, the default is 'never' (don't get too excited though, as the dataset lifetime is still subject to an idle-time expiration!). Note also the warning about filesystem creation time. If you create a filesystem on a 1 TiB blockstore, it can take on the order of 5 minutes for ext2 and ext3 (it takes only seconds for ext4 and ufs). If you interrupt the creation during this time, you will get an ugly Python backtrace, but the blockstore creation will continue. However, you will not be able to use the blockstore until creation completes.

If the result of the command is instead:

*** createdataset: Allocation of 102400 would put testbed over quota (100000).

then you will have to negotiate with testbed admins for a larger quota.

Creating a short-term dataset:

To create a 500GiB short-term dataset in the "testbed" project with an ext3 filesystem (suitable for use both under Linux and FreeBSD):

/usr/testbed/bin/createdataset -t stdataset -s 512000 -f ext3 testbed/tmp

which should return:

    WARNING: FS creation can take 5 minutes or longer, be patient!
    Created lease 'testbed/tmp' for short-term dataset, expires on Wed Jan 29 13:15:39 2014.

Since you did not specify an explicit expiration, it will use the default for a short-term dataset. If you try to create a dataset which is too large or exceeds the maximum lifetime you would get one of:

Requested size (1512000) is larger than allowed by default (1048576). Try again with '-U' option and request special approval by testbed-ops.

Expiration is beyond the maximum allowed by default (Mon Jan 1 00:00:00 2035 > Wed Jan 29 13:19:27 2014). Try again with '-U' to request special approval by testbed-ops.

In that case, you can follow the advice and use the -U ("create unapproved") option, and see whether the testbed administrators approve your extraordinary request. You should receive email within a day or so telling you whether it has been approved.

Determining the site-specific size and duration limits for datasets:

Use the showdataset command:

/usr/testbed/bin/showdataset -D

which on the Utah Emulab will return the output:

    stdataset:
      Maximum size: 1.00 TiB (1099511627776 bytes).
      Expiration: after a lease-specific time period (maximum of 7.0 days from creation).
      Disposition: destroyed after expiration plus up to 2 1.0 day extensions.
    ltdataset:
      Maximum size: determined by project quota.
      Expiration: after 180.0 days idle.
      Disposition: locked-down after expiration.
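Given these limits, you can roughly predict which response createdataset will give before running it. The sketch below makes several assumptions:

- It hard-codes the Utah values (1 TiB and 7 days for short-term datasets). Other sites differ, so check `showdataset -D` at yours.
- It takes sizes in MiB, as createdataset's -s option appears to.
- It assumes a long-term request fails when the project's current usage plus the new allocation exceeds the quota.

`st_check` and `lt_check` are hypothetical helpers, not Emulab tools.

```sh
#!/bin/sh
# Hypothetical pre-flight checks mirroring the createdataset responses shown
# above. Values are the Utah defaults from 'showdataset -D'; other sites differ.
# All sizes are in MiB, matching the -s option in the examples.
ST_MAX_MIB=1048576   # short-term maximum size (1 TiB)
ST_MAX_DAYS=7        # short-term maximum lifetime, in days from creation

# st_check <size_mib> <days>: a short-term request beyond either limit needs -U.
st_check() {
    if [ "$1" -gt "$ST_MAX_MIB" ] || [ "$2" -gt "$ST_MAX_DAYS" ]; then
        echo "needs-approval"
    else
        echo "ok"
    fi
}

# lt_check <size_mib> <used_mib> <quota_mib>: long-term datasets are bounded
# only by the project quota (assumed: current usage plus the new allocation).
lt_check() {
    if [ $(( $2 + $1 )) -gt "$3" ]; then
        echo "over-quota"
    else
        echo "ok"
    fi
}
```

With these values, `st_check 1512000 7` prints `needs-approval` and `lt_check 102400 0 100000` prints `over-quota`. These match the two error messages shown earlier.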

Using a Persistent Blockstore

Assuming you have successfully run createdataset, then the storage has been allocated and can now be mapped into an experiment. This is done by creating a blockstore object in your NS file and then using the set-node and set-lease methods to associate a node and dataset:

    set node [$ns node]
    ...
    set idisk [$ns blockstore]
    $idisk set-lease "testbed/images"
    $idisk set-node $node

A complete example is:

    # Input Emulab definitions
    source tb_compat.tcl

    # Create the simulator object
    set ns [new Simulator]

    # Our node
    set node [$ns node]

    #
    # Node type (optional).
    # As there is no way to explicitly specify a bandwidth between the SAN-server
    # and your node, you may want to specify a node type with at least a 1Gb
    # Ethernet link. N.B. this does NOT mean you will get 1Gb throughput since
    # the SAN-server links are shared by all experiments.
    #
    # UTAH SPECIFIC choices (other sites will be different):
    #   'pc3000'  you will probably get 1Gb, but you might get 100Mb
    #   'd710'    1Gb
    #   'd430'    10Gb, this is the preferred node type to use
    #   'd820'    10Gb, please do not use these unless you really need!
    #
    #tb-set-hardware $node pc3000
    #tb-set-hardware $node d710
    #tb-set-hardware $node d430
    #tb-set-hardware $node d820

    #
    # Node OS (optional).
    # Not all images may have the client-side support for blockstores.
    # You should stick with something recent.
    #
    # UTAH SPECIFIC choices (other sites will be different):
    #   'UBUNTU16-64-STD'  Most popular 64-bit Linux
    #   'FBSD103-64-STD'   64-bit FreeBSD 10.3
    #
    #tb-set-node-os $node UBUNTU16-64-STD
    #tb-set-node-os $node FBSD103-64-STD

    #
    # Attach a persistent dataset.
    # Do not specify a mount-point until after you have created a filesystem!
    # Uncomment set-readonly to prevent writing to the dataset.
    #
    set idisk [$ns blockstore]
    $idisk set-lease "testbed/images"
    $idisk set-node $node
    #$idisk set-mount-point "/mnt/images"
    #$idisk set-readonly 1

    # Use static IP routing
    $ns rtproto Static

    # Execute!
    $ns run

Recall that you should only use the set-mount-point method if you have already created a filesystem on the blockstore. If you are watching the console during the boot of the node, you will see a message like:

    Checking Testbed storage configuration ...
    Checking 'disk0'... disk0: local disk at /dev/sda exists
    Checking 'disk1'... disk1: local disk at /dev/sdb exists
    Checking 'idisk'...
    Configuring 'idisk'... idisk: persistent iSCSI node attached as /dev/sdc

The name of the persistent blockstore will be the same as specified in the NS file. In this example it is 'idisk', and it is accessible as /dev/sdc on Linux. If you are not watching the console, or you need to programmatically determine the device, you can look at the file /var/emulab/boot/storagemap, which is formatted like:

    disk0 /dev/sda
    disk1 /dev/sdb
    idisk /dev/sdc
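To find the device programmatically, a small lookup over that file is enough. This sketch assumes, as the listing suggests, that each line of the storagemap holds a blockstore name followed by its device path. The `bs_device` function name is hypothetical.

```sh
#!/bin/sh
# Print the device node attached for a named blockstore, assuming the
# "name device" line format of the storagemap shown above.
# Usage: bs_device <blockstore-name> [storagemap-path]
# Exits non-zero if the name is not listed.
bs_device() {
    awk -v n="$1" '
        $1 == n { print $2; found = 1; exit }
        END     { exit !found }
    ' "${2:-/var/emulab/boot/storagemap}"
}
```

On the node, for instance, `dev=$(bs_device idisk) && sudo mount "$dev" /mnt/images` mounts the blockstore wherever it was attached.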

Manually creating a filesystem

If this is the first use of the blockstore and you are planning to put a filesystem on it yourself, it is important that the filesystem be placed on the raw device and not on a partition of the device. For this reason, you cannot use the Emulab mkextrafs script, which first creates an MBR on the disk and puts the filesystem in a partition. You should instead log in to the node and (on Linux) run:

    echo y | sudo mkfs -t ext4 /dev/sdc
    sudo mkdir /mnt/images
    sudo mount /dev/sdc /mnt/images

replacing /dev/sdc with whatever device was used (as determined by looking at the storagemap). At this point, the new storage is available under /mnt/images and you can load it up with whatever you want and continue with your experiment. When you are finished, unmount the filesystem:

sudo umount /mnt/images

and swap out the experiment. If you are using one of the supported filesystem types, all future uses of the blockstore can then use set-mount-point:

$idisk set-mount-point "/mnt/images"

where idisk should be replaced by the name of your blockstore, and /mnt/images by the location where you want to mount the blockstore. When you specify the mount point, Emulab will automatically mount it at boot time with a message like:

... Configuring 'idisk'... idisk: persistent iSCSI node attached as /dev/sdc, ext4 FS mounted RW on /mnt/images

Emulab will likewise automatically unmount the filesystem when shutting down or swapping out the experiment.

If you are not using a supported filesystem, then you should never use set-mount-point, as the boot-time blockstore initialization on the node will fail. Instead, you will have to manage mounts yourself, hooking into the startup and shutdown process as appropriate for the OS.

Important note: you cannot just add your filesystem mount to /etc/fstab, as the iSCSI system has not been initialized at the time the default mounts are done. You must handle mounting after the Emulab scripts have run, and unmounting before Emulab shuts down.
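As a sketch of handling the mount yourself, the script below waits for a blockstore to appear in /var/emulab/boot/storagemap and then mounts it. It assumes the "name device" line format shown earlier. It is meant to run after Emulab's boot-time scripts, for example from an Emulab startup command. Whether your startup hook runs late enough is an assumption you should verify on your image. The `mount_when_ready` function, its arguments, and the `DRY_RUN` variable are hypothetical. Unmounting before shutdown is still up to you.

```sh
#!/bin/sh
# Hypothetical helper: mount a persistent blockstore after Emulab's boot-time
# storage setup has run, instead of via /etc/fstab.
# Usage: mount_when_ready <name> <mountpoint> [timeout-secs] [storagemap-path]
# Set DRY_RUN=1 to print the mkdir/mount commands instead of running them.
mount_when_ready() {
    name=$1 mnt=$2
    timeout=${3:-300}
    map=${4:-/var/emulab/boot/storagemap}
    waited=0
    # Poll until the named blockstore is listed in the storagemap.
    while :; do
        dev=$(awk -v n="$name" '$1 == n { print $2; exit }' "$map" 2>/dev/null)
        [ -n "$dev" ] && break
        if [ "$waited" -ge "$timeout" ]; then
            echo "blockstore '$name' not attached after ${timeout}s" >&2
            return 1
        fi
        sleep 5
        waited=$((waited + 5))
    done
    # With DRY_RUN set, these expand to "echo sudo ..." and only print.
    ${DRY_RUN:+echo} sudo mkdir -p "$mnt"
    ${DRY_RUN:+echo} sudo mount "$dev" "$mnt"
}
```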

Compatible OS Images

In time most supported Operating System images will have blockstore support. For now, please choose (or derive your own) from the following list:

| Image Name | Local Blockstore Support | Remote Blockstore Support | Persistent Blockstore Support |
|---|---|---|---|
| FEDORA15-64-STD | yes | yes | no |
| UBUNTU14-64-STD (Linux 3.x kernel) | yes | yes | yes |
| UBUNTU16-64-STD (Linux 4.x kernel) | yes | yes | yes |
| CENTOS7-64-STD | yes | yes | yes |
| FBSD92-64-STD | yes | yes, but slow/flaky | yes, but slow/flaky |
| FBSD102-64-STD | yes | yes | yes |
| FBSD103-64-STD | yes | yes | yes |
| FBSD110-64-STD | yes | yes | yes |

Note that Emulab's mapper will explicitly check for the requisite OS features (remote and/or local blockstore support), and fail if they are not present.

Note also that, as indicated, iSCSI initiator support on older FreeBSD releases is primitive. Starting with 10.0, there is a new iSCSI initiator implementation.

Reference

This section details the NS syntax and directives specific to storage objects in Emulab. Some usage hints and caveats are presented inline.

Creating blockstore objects

set myblocks [$ns blockstore]

This directive tells the parser to create a blockstore object, and assigns it the name "myblocks". Without further calls to the "set-*" functions, this object has only default settings and lacks even a class, so it would not map as is. For ephemeral blockstores, you need to at least give it a class and bind it to a link or lan (remote storage), or to a specific host (local storage). For persistent blockstores, it needs to be associated with a dataset lease.

$myblocks set-class "(SAN|local)"

The "set-class" function is used to tell Emulab what kind of storage object this is to be. Currently the only options are "SAN" (for remote block storage objects), and "local" (for local node storage). SAN class objects will only map onto shared storage hosts presently. For ephemeral blockstores, you MUST specify the class for your blockstore, else the parser will complain. For persistent blockstores, it is not necessary as SAN is assumed. In the future, we might support local persistent blockstores.

$myblocks set-size "<number>(TB|GB|MB|TiB|GiB|MiB)"

Every storage object has an associated size. For ephemeral blockstores, the "set-size" function is how you set it. All values are converted internally to mebibytes (2^20 bytes), which means that base-10 quantities will be rounded down to the nearest mebibyte. As with other Emulab resources, think carefully about what you really need, and don't ask for more than this (particularly when specifying remote storage). Storage objects larger than a couple of terabytes are unlikely to map. For local ephemeral blockstores, you may omit the size, and Emulab will use the maximum available space on the given "placement". For persistent blockstores, you should NOT specify a size.
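The conversion rule above can be sketched in a few lines of shell. This illustrates the documented rounding; it is not Emulab's parser code. `size_to_mib` is a hypothetical name. It only accepts a plain decimal integer followed by one of the six unit suffixes listed for set-size.

```sh
#!/bin/sh
# Sketch of the documented size normalization: convert a set-size value to
# mebibytes (2^20 bytes), rounding base-10 units down.
# Usage: size_to_mib <integer><TB|GB|MB|TiB|GiB|MiB>
size_to_mib() {
    num=${1%%[!0-9]*}      # leading digits, e.g. "100" from "100GB"
    unit=${1#"$num"}       # the remainder, e.g. "GB"
    [ -n "$num" ] || return 1
    case $unit in
        TiB) echo $((num * 1024 * 1024)) ;;
        GiB) echo $((num * 1024)) ;;
        MiB) echo $((num)) ;;
        # Base-10 units: integer division rounds down to a whole MiB.
        TB)  echo $((num * 1000000000000 / 1048576)) ;;
        GB)  echo $((num * 1000000000 / 1048576)) ;;
        MB)  echo $((num * 1000000 / 1048576)) ;;
        *)   return 1 ;;
    esac
}
```

For example, `size_to_mib 100GB` prints 95367, while `size_to_mib 100GiB` prints 102400. The latter is the same value passed to `createdataset -s` in the long-term dataset example.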

$myblocks set-protocol "(iSCSI|SAS)"

If you wish to pin down the bus transport used for your storage object, the "set-protocol" function allows you to do this. Currently only "iSCSI" and "SAS" options are supported, though this list should grow over time. iSCSI is only for class "SAN", and "SAS" is for class "local". In the near-to-medium term, you can omit this since Emulab will pick the only available protocol given the class. Since persistent blockstores currently imply iSCSI, you should either specify that or omit it entirely.
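The class, size, and protocol rules above can be restated as a quick sanity check. The `check_blockstore` function below is a hypothetical sketch, not Emulab's validation; the parser and mapper remain the authority. Pass an empty string for any attribute you did not set, and a non-empty lease for a persistent blockstore. It does not model set-type.

```sh
#!/bin/sh
# Hypothetical checker restating the blockstore attribute rules above.
# Usage: check_blockstore <lease> <class> <size> <protocol>   ("" = not set)
check_blockstore() {
    lease=$1 class=$2 size=$3 proto=$4
    if [ -n "$lease" ]; then
        # Persistent: SAN/iSCSI is implied and the size comes from the dataset.
        [ -z "$class" ] || [ "$class" = SAN ] || { echo "persistent blockstores are SAN"; return 1; }
        [ -z "$proto" ] || [ "$proto" = iSCSI ] || { echo "persistent blockstores use iSCSI"; return 1; }
        [ -z "$size" ] || { echo "do not set a size on a persistent blockstore"; return 1; }
        return 0
    fi
    # Ephemeral: a class is required; SAN objects also need a size.
    case $class in
        SAN)   [ -n "$size" ] || { echo "SAN blockstores need a size"; return 1; }
               want=iSCSI ;;
        local) want=SAS ;;
        "")    echo "ephemeral blockstores need a class"; return 1 ;;
        *)     echo "unknown class: $class"; return 1 ;;
    esac
    # The protocol may be omitted; if given, it must match the class.
    [ -z "$proto" ] || [ "$proto" = "$want" ] || { echo "$class blockstores use $want"; return 1; }
}
```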

$myblocks set-type <type>

If you happen to know Emulab's underlying Storage Object types, you can set your object to this type directly instead of letting the mapper figure it out for you. Use of this is discouraged unless you have a specific need and know what you are doing. If the type is set, then the class, protocol, and most other attributes should NOT be since they are implied by the type. For remote (SAN) objects, however, you must also specify the size with "set-size".
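The attribute rules above can be summarized as a checklist. The following Python sketch is hypothetical and only mirrors those rules: the `Blockstore` class and `attribute_problems` function are not part of Emulab. The requirement that ephemeral SAN objects carry a size is inferred from "local ephemeral blockstores may omit the size". Binding (links, lans, set-node) is not modeled here.

```python
from dataclasses import dataclass
from typing import List, Optional

# The only protocol available for each class, per the set-protocol description.
PROTOCOL_FOR_CLASS = {"SAN": "iSCSI", "local": "SAS"}


@dataclass
class Blockstore:
    bsclass: Optional[str] = None   # set-class: "SAN" or "local"
    size: Optional[str] = None      # set-size, e.g. "100GB"
    protocol: Optional[str] = None  # set-protocol: "iSCSI" or "SAS"
    bstype: Optional[str] = None    # set-type: an Emulab storage object type
    lease: Optional[str] = None     # set-lease "pid/dataset-name" (persistent)


def attribute_problems(bs: Blockstore) -> List[str]:
    """Return rule violations; an empty list means the attributes look sane."""
    problems: List[str] = []
    if bs.bstype is not None:
        # The type implies class and protocol, so they should not be set.
        if bs.bsclass is not None or bs.protocol is not None:
            problems.append("class and protocol are implied by set-type")
        return problems
    if bs.lease is not None:
        # Persistent: SAN over iSCSI is assumed and the size comes from the dataset.
        if bs.size is not None:
            problems.append("do not set a size on a persistent blockstore")
        if bs.bsclass not in (None, "SAN"):
            problems.append("persistent blockstores are SAN class")
        if bs.protocol not in (None, "iSCSI"):
            problems.append("persistent blockstores use iSCSI")
        return problems
    if bs.bsclass not in PROTOCOL_FOR_CLASS:
        problems.append("ephemeral blockstores need set-class SAN or local")
        return problems
    if bs.protocol not in (None, PROTOCOL_FOR_CLASS[bs.bsclass]):
        problems.append(f"{bs.protocol} is not valid for class {bs.bsclass}")
    if bs.bsclass == "SAN" and bs.size is None:
        problems.append("remote (SAN) blockstores need set-size")
    return problems
```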

Binding storage objects

Storage objects can be bound to nodes via a link, lan, or by directly "fixing" them to a node. The former two are for storage objects accessible via a remote transport bus (e.g. iSCSI), while the latter is for local node storage (though it can also be used as a shorthand for associating a storage object with a specific node in your experiment).

set san [$ns make-san "$node1 $node2 $myblocks1 $myblocks2"]

One or more blockstore objects can be put into a SAN network as above. When this is done, Emulab's parser and mapper implicitly create a fictitious virtual machine container to represent each blockstore. You will see these in your experiment node listing (type "blockstore"). You can't SSH into these or use them like you would other nodes. They do, however, have experimental net IP addresses, will answer pings, and listen for iSCSI connections. Really this is just the shared infrastructure storage host listening for your incoming mount requests on a dedicated IP address. Note that the 'san' network is always "best effort". The "make-san" directive is just syntactic sugar for: "make-lan 'host1 host2 disk1 ...' ~ 0ms". Emulab's shared storage infrastructure does not support shaped links/lans at this time; the parser will actively disallow the specification of shaping when remote blockstore objects are in the member list for a link or lan. The OS on all nodes in the san network must support the "rem-bstore" OS feature, or experiment mapping will fail.
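The following hypothetical Python helpers make the "syntactic sugar" point and the shaping restriction concrete. The first renders the make-lan line that a make-san call stands in for. The second mirrors the parser rule that lans containing remote blockstores must use "~" bandwidth and "0ms" delay. Neither is an Emulab API.

```python
def make_san_equivalent(members):
    """Render the NS make-lan call that make-san is described as shorthand for."""
    if not members:
        raise ValueError("a SAN needs at least one member")
    return '$ns make-lan "{}" ~ 0ms'.format(" ".join(members))


def check_remote_lan_shaping(bandwidth, delay, has_remote_blockstore):
    """Reject shaping on any link/lan whose members include a remote blockstore."""
    if has_remote_blockstore and (bandwidth, delay) != ("~", "0ms"):
        raise ValueError("remote blockstore lans must use '~' bandwidth and '0ms' delay")
```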

$myblocks set-node <some_node>

This directive tells Emulab to bind the blockstore to a particular node. You MUST specify this when creating a local storage object, otherwise Emulab has no idea what to do with the object! The OS on the local node must support the 'loc-bstore' OS feature. You can also use this with remote blockstores to bind them to a single node in your experiment; this is just syntactic sugar that creates a link between the remote blockstore and the node behind the scenes in the parser.

$myblocks set-placement "(any|sysvol|nonsysvol)"

Use this directive to specify where Emulab should allocate local storage from (not valid with remote blockstores).

- any: Use any remaining space on the node, including free space on the system volume and any non-system disks. This gives you the maximum space available on a node and is the default placement.

- sysvol: Only allocate from the remaining system (boot) disk space. This is the equivalent of what you would get with the old mkextrafs script. Useful if you wish to later capture the blockstore by making a "whole disk image" of your node.

- nonsysvol: Only allocate from disk space that does not include the system disk. Useful for isolation and maximum performance on systems with more than one extra disk.

Emulab will use volume management mechanisms to aggregate physical devices, partitions, etc. as needed to fulfill your request (lvm on Linux, and zfs or gvinum on FreeBSD).
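To illustrate which space each placement can draw on, here is a minimal Python sketch over a hypothetical disk model (the `Disk` class, device names, and sizes are made up). The returned total corresponds to what you would get by omitting set-size on a local blockstore. The real aggregation is done with lvm, zfs, or gvinum, not by code like this.

```python
from dataclasses import dataclass


@dataclass
class Disk:
    name: str        # hypothetical device name, e.g. "sda"
    free_mib: int    # space not already claimed by the OS image
    is_system: bool  # True for the boot ("system") disk


def placement_pool(disks, placement="any"):
    """Return (disks usable for this placement, their total free MiB)."""
    if placement == "any":
        chosen = list(disks)
    elif placement == "sysvol":
        chosen = [d for d in disks if d.is_system]
    elif placement == "nonsysvol":
        chosen = [d for d in disks if not d.is_system]
    else:
        raise ValueError(f"unknown placement: {placement!r}")
    chosen = [d for d in chosen if d.free_mib > 0]
    return chosen, sum(d.free_mib for d in chosen)
```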

$myblocks set-lease "pid/dataset-name"

This directive is used with persistent blockstore objects. As described above, these blockstores are named and associated with a project. To use one in an experiment, you must specify the project id (pid) and the name given to the dataset when it was created. For a persistent blockstore, you should bind it to a specific node in the experiment using set-node as shown in the example.

Asking for a filesystem

$myblocks set-mount-point "/some/mount"

For ephemeral blockstores, Emulab will automatically create a filesystem for you when you use this directive. However, you can't ask for a specific filesystem type (yet). Instead, the type will be implied depending on the node OS and blockstore type as follows:

| OS | Blockstore type | FS type created | Notes |
|---|---|---|---|
| Linux | local (sysvol) | ext4, ext3, ext2 | Uses the most recent version of ext supported by the OS. Created on raw disk partition to ensure compatibility with Emulab's imagezip tool. |
| Linux | local (nonsysvol, any) | ext4, ext3, ext2 | Uses most recent version of ext supported. Created on top of LVM2 logical volume manager. |
| Linux | remote | ext4, ext3, ext2 | Uses most recent version of ext supported. |
| FreeBSD | local (sysvol) | ufs2 | Created on raw disk partition to ensure compatibility with Emulab's imagezip tool. |
| FreeBSD (32-bit) | local (nonsysvol, any) | ufs2 | Created on top of gvinum logical volume. Will create a "stripe" volume for nonsysvol when all additional disks are the same size. Creates a "concat" volume otherwise. |
| FreeBSD (64-bit) | local (nonsysvol, any) | zfs | Created on top of a ZFS zpool. A native ZFS filesystem has much higher performance than a ufs2 filesystem on top of a ZFS zvol. To get a ufs2 filesystem with nonsysvol or any placement, create the blockstore without specifying a mount point, create the filesystem by hand with newfs, and add a mount point to /etc/fstab. |
| FreeBSD | remote | ufs2 | |

The filesystem will have reasonable defaults, and will span the size of the blockstore. Mount points are valid for local storage, and for remote storage connected to a single node.
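The table can be read as a small decision procedure. The following Python sketch encodes it, plus the 32-bit FreeBSD stripe/concat choice. Both function names and the "supported" parameter are hypothetical. Treating "any" placement as always producing a concat volume is an interpretation of the table's "otherwise".

```python
EXT_NEWEST_FIRST = ("ext4", "ext3", "ext2")


def ephemeral_fs(os_family, placement="any", remote=False, bits=64,
                 supported=EXT_NEWEST_FIRST):
    """Return (filesystem, what it is created on) for a mounted ephemeral blockstore."""
    if os_family == "Linux":
        # "Uses the most recent version of ext supported by the OS."
        fs = next((f for f in EXT_NEWEST_FIRST if f in supported), None)
        if fs is None:
            raise ValueError("no ext filesystem supported")
        if remote:
            return fs, "remote blockstore"
        if placement == "sysvol":
            return fs, "raw disk partition"
        return fs, "LVM2 logical volume"
    if os_family == "FreeBSD":
        if remote:
            return "ufs2", "remote blockstore"
        if placement == "sysvol":
            return "ufs2", "raw disk partition"
        if bits == 32:
            return "ufs2", "gvinum volume"
        return "zfs", "ZFS zpool"
    raise ValueError(f"unsupported OS: {os_family!r}")


def gvinum_volume_kind(placement, extra_disk_sizes):
    """32-bit FreeBSD: stripe for nonsysvol when all extra disks match in size."""
    if placement == "nonsysvol" and len(set(extra_disk_sizes)) == 1:
        return "stripe"
    return "concat"
```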

For persistent blockstores, the set-mount-point directive will only mount an existing filesystem. Emulab will not automatically create a filesystem inside the blockstore; either use the -f option of createdataset, or create the filesystem yourself using these guidelines:

| Desired OS(es) | Desired access | FS type supported | Notes |
|---|---|---|---|
| Linux | Read-write | ext4, ext3, ext2 | Use ext4 for recent Linux versions. Initial creation of ext2 and ext3 filesystems via createdataset can be slow. |
| FreeBSD | Read-write | ufs2, ext3, ext2 | Specify "-f ufs" when using createdataset to get a ufs2 filesystem. |
| Linux | Read-only | ext4, ext3, ext2, ufs2 | Linux can only mount FreeBSD UFS filesystems read-only. You will need to populate the FS under FreeBSD (duh!) |
| FreeBSD | Read-only | ext3, ext2, ufs2 | |
| FreeBSD 10.x | Read-only | ext4, ext3, ext2, ufs2 | Only FreeBSD 10.x and above support some ext4 extensions and then just as read-only mounts. |
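To check a planned dataset against this table, the following Python sketch encodes the rows as a lookup. The `can_mount` helper and its `freebsd_major` parameter are hypothetical. The "FreeBSD 10.x" row is read as "10.x and above", as its note says.

```python
# (OS family, access) -> filesystems listed in the table above.
SUPPORTED = {
    ("Linux", "rw"): {"ext4", "ext3", "ext2"},
    ("FreeBSD", "rw"): {"ufs2", "ext3", "ext2"},
    ("Linux", "ro"): {"ext4", "ext3", "ext2", "ufs2"},
    ("FreeBSD", "ro"): {"ext3", "ext2", "ufs2"},
}


def can_mount(os_family, access, fs, freebsd_major=None):
    """True if the table says this OS can mount fs with the given access ("rw"/"ro")."""
    allowed = set(SUPPORTED[(os_family, access)])
    # FreeBSD 10.x and above can also mount ext4, but only read-only.
    if (os_family == "FreeBSD" and access == "ro"
            and freebsd_major is not None and freebsd_major >= 10):
        allowed.add("ext4")
    return fs in allowed
```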

If you have created a filesystem on your blockstore, you can have it automatically mounted on subsequent uses by using this directive, subject to certain restrictions:

- No nested mounts (one mount residing down a path rooted at another mount).

- No funky characters in the path components. Emulab allows: [a-z], [A-Z], [0-9] and underscore (_).

- No mounting on top of critical paths. Asking for mounts on top of things like "/sbin", "/", "/etc", etc. is disallowed.

- Only available for local nodes and san "links" (i.e., a lan with two members: one node, and one remote blockstore).
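The first three restrictions can be checked mechanically before swap-in. This is a hypothetical Python pre-check, not Emulab's validator. Its critical-path set holds only the examples named above ("/", "/sbin", "/etc"), whereas Emulab's actual list is longer. The fourth restriction, which concerns topology, is not modeled.

```python
import re
from itertools import combinations

COMPONENT = re.compile(r"[A-Za-z0-9_]+")
# Only the examples the text names; Emulab protects more paths than these.
CRITICAL = {"/", "/sbin", "/etc"}


def mount_point_problems(paths):
    """Return human-readable problems; an empty list means the mounts look acceptable."""
    problems = []
    normalized = []
    for path in paths:
        p = path.rstrip("/") or "/"
        normalized.append(p)
        if p in CRITICAL:
            problems.append(f"{path}: mounting over a critical path")
        elif not p.startswith("/") or not all(
                COMPONENT.fullmatch(c) for c in p.split("/")[1:]):
            problems.append(f"{path}: must be absolute, components limited to [A-Za-z0-9_]")
    # No nested mounts: neither path may sit underneath (or equal) the other.
    for a, b in combinations(normalized, 2):
        if a == b or a.startswith(b + "/") or b.startswith(a + "/"):
            problems.append(f"{a} and {b}: nested or duplicate mounts")
    return problems
```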

Persistent Storage Commands

Because persistent datasets exist independent of any experiment, there is a need for an "out-of-band" API for creating, managing, and deleting them. Currently there is no web interface for performing these operations, so you must use the command line interface on users.emulab.net. The available commands are:

- createdataset [-hU] [-t type] [-e expire] [-f fstype] -s size dataset_id

Creates a dataset of the indicated type (optional, default is 'stdataset') and size (required) with the given <pid>/<id> format name. You may also specify an explicit expiration date and an initial filesystem. The caller must be an admin or have local_root permission in the project.

The type is either 'stdataset' or 'ltdataset'.

The expire time should be in the format recognized by the Perl str2time function. If no expiration time is specified, it will default to the site-wide default value for this dataset type (which can be queried with "showdataset -D").

If specified, fstype should be one of: 'ext2', 'ext3', 'ext4', or 'ufs'. This option will pre-create a filesystem of the appropriate type on the underlying blockstore. If this option is not specified, then no filesystem will be created and you cannot use the NS file set-mount-point option unless and until a filesystem is explicitly created. If the dataset is intended to be used only under Linux, then 'ext4' is the preferred filesystem type. If using only under FreeBSD, then use 'ufs'. If you intend to use both, then 'ext3' is the best choice.
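The fstype advice in the preceding paragraph amounts to a three-way choice. Here it is as a small hypothetical Python helper; `recommended_fstype` is not an Emulab tool.

```python
def recommended_fstype(use_linux, use_freebsd):
    """Return the createdataset -f value suggested for the OSes that will mount it."""
    if use_linux and use_freebsd:
        return "ext3"   # the suggested choice when both OSes will use the dataset
    if use_linux:
        return "ext4"
    if use_freebsd:
        return "ufs"    # produces a ufs2 filesystem
    raise ValueError("the dataset must be intended for at least one OS")
```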

This command will automatically create the dataset, allocate storage resources, and create a filesystem, if the desired size and expiration time are within the constraints imposed by the project quota and site-wide limits. Otherwise the call will fail, unless the -U option was specified. With -U, the dataset will be left unapproved and an email will be sent to the site administrators who will need to approve or deny the request.
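The three possible results of a createdataset call can be sketched as follows. This hypothetical Python function takes the quota and site-limit check as a single boolean, since the text does not spell that check out.

```python
def createdataset_outcome(within_limits, allow_unapproved):
    """within_limits: size and expiration fit the project quota and site-wide limits."""
    if within_limits:
        return "created"     # storage allocated; filesystem made if -f was given
    if allow_unapproved:
        return "unapproved"  # -U: left pending; site admins are emailed to approve or deny
    return "failed"
```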

Example:

createdataset -t ltdataset -e 1/1/2015 -f ext4 -s 1000000 testbed/foo

would create a 1TB long-term dataset called "foo" with an ext4 filesystem that will expire at the beginning of 2015.
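When scripting dataset creation, it can help to build the argument list rather than a shell string. The following Python sketch uses only the options in the usage line above. It assumes -s is in megabytes, which is inferred from the example (1000000 for 1TB). The helper name is hypothetical.

```python
DATASET_TYPES = {"stdataset", "ltdataset"}
FS_TYPES = {"ext2", "ext3", "ext4", "ufs"}


def createdataset_argv(dataset_id, size_mb, dstype=None, expire=None,
                       fstype=None, allow_unapproved=False):
    """Build a createdataset command line as a list of arguments."""
    pid, sep, name = dataset_id.partition("/")
    if not (pid and sep and name):
        raise ValueError("dataset_id must look like <pid>/<id>")
    if dstype is not None and dstype not in DATASET_TYPES:
        raise ValueError(f"unknown dataset type: {dstype!r}")
    if fstype is not None and fstype not in FS_TYPES:
        raise ValueError(f"unknown fstype: {fstype!r}")
    argv = ["createdataset"]
    if allow_unapproved:
        argv.append("-U")
    if dstype:
        argv += ["-t", dstype]
    if expire:
        argv += ["-e", expire]  # any date string Perl's str2time accepts
    if fstype:
        argv += ["-f", fstype]
    argv += ["-s", str(size_mb), dataset_id]  # size assumed to be in MB
    return argv
```

Joining the result with spaces for the example above reproduces "createdataset -t ltdataset -e 1/1/2015 -f ext4 -s 1000000 testbed/foo".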

- extenddataset [-h] dataset_id

Extends the lifetime of the named dataset by the dataset-type-specific extension period (available via "showdataset -D"). The caller must be the dataset owner, an admin, or have local_root privileges in the project.

The dataset must currently be in the grace state (as indicated by "showdataset"). Additionally, the dataset type must allow extensions, and either 1) the type allows an unlimited number of extensions, or 2) the dataset has not yet been extended the maximum number of times. If successful, the dataset is returned to the valid state.
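The eligibility conditions for extenddataset combine several checks. This hypothetical Python predicate collects them, with max_extensions=None standing in for "unlimited". The role strings are illustrative labels, not Emulab identifiers.

```python
def can_extend(caller_role, state, type_allows_extensions,
               max_extensions, times_extended):
    """True if extenddataset should succeed under the rules described above."""
    if caller_role not in {"owner", "admin", "local_root"}:
        return False
    if state != "grace":
        return False
    if not type_allows_extensions:
        return False
    return max_extensions is None or times_extended < max_extensions
```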

- showdataset [-hDa] [-p pid] [-u uid] [ dataset_id ... ]

Show information about one or more datasets. With no options, show information about all datasets accessible by the caller. Output of this command shows the name, owner, type, state, use count, number of extensions, and attributes of each dataset along with the inception, last-used, and expiration dates.

With the -p option, shows all datasets belonging to a project. The caller must be a member of the project or an admin.

With the -u option, shows all datasets belonging to the indicated user. The caller must be an admin.

With a list of datasets, show info about those datasets. The caller must have access to those datasets or be an admin.

Only one of -p, -u, or a list of datasets can be given.

If the caller is an admin, -a can be used to show info about all datasets in the system.
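The selection rules for showdataset can be expressed as a small argument check. This hypothetical Python sketch models those rules. The text does not say how -a interacts with the other selectors, so here -a simply wins. Per-project membership and per-dataset access checks are left out.

```python
def showdataset_mode(pid=None, uid=None, datasets=(), show_all=False, is_admin=False):
    """Return which selection a showdataset call makes, enforcing the rules above."""
    given = [flag for flag, present in (("-p", pid is not None),
                                        ("-u", uid is not None),
                                        ("list", bool(datasets))) if present]
    if len(given) > 1:
        raise ValueError("only one of -p, -u, or a list of datasets can be given")
    if (show_all or uid is not None) and not is_admin:
        raise PermissionError("-a and -u require admin privileges")
    if show_all:
        return "all"
    return given[0] if given else "accessible"
```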

Finally, the -D option describes, in human-digestible terms, the settings of the site-specific variables for each dataset type, for example:

stdataset:
  Maximum size: 1.00 TiB (1099511627776 bytes).
  Expiration: after a lease-specific time period (maximum of 7.0 days from creation).
  Disposition: locked-down after expiration plus 4.0 days grace period.
  Extensions: allows up to 2 1.0 day extensions during grace period.
ltdataset:
  Maximum size: determined by project quota.
  Expiration: after 180.0 days idle.
  Disposition: locked-down after expiration plus 180.0 days grace period.
  Extensions: none.
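Reading that output as dates, the following Python sketch computes when a dataset expires and when it is locked down. The constants are the sample values shown above; each site sets its own. Extensions are ignored for simplicity, and the function names are hypothetical.

```python
from datetime import datetime, timedelta

# Sample values from the "showdataset -D" output above; sites differ.
ST_MAX_LEASE = timedelta(days=7.0)
ST_GRACE = timedelta(days=4.0)
LT_IDLE_EXPIRY = timedelta(days=180.0)
LT_GRACE = timedelta(days=180.0)


def stdataset_timeline(created, lease=ST_MAX_LEASE):
    """Return (expiration, lock-down) for a short-term dataset, ignoring extensions."""
    if not timedelta(0) < lease <= ST_MAX_LEASE:
        raise ValueError("stdataset leases are capped at 7 days from creation")
    expires = created + lease
    return expires, expires + ST_GRACE


def ltdataset_timeline(last_used):
    """Return (expiration, lock-down) for a long-term dataset idle since last_used."""
    expires = last_used + LT_IDLE_EXPIRY
    return expires, expires + LT_GRACE
```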

Examples:

showdataset -p testbed

would show all datasets associated with the testbed project (assuming the caller has appropriate permissions).

wap showdataset -a

would show info about all datasets in the system. The -a option is admin-only, which is why this example runs showdataset via wap.

- deletedataset [-hf] dataset_id

Destroys a dataset, freeing the storage space associated with it. This is permanent: there is no recovering the data after a dataset has been destroyed!

By default, datasets can only be destroyed if they are in the unapproved or expired states and not currently in use. The -f option can be used to force destruction from any state, but only if not currently in use. Needless to say, this option should be used with care.
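The deletion rules reduce to the following hypothetical Python predicate: a dataset in use can never be deleted, and -f lifts only the state restriction.

```python
DELETABLE_STATES = {"unapproved", "expired"}


def can_delete(state, in_use, force=False):
    """True if deletedataset should proceed under the rules described above."""
    if in_use:
        return False  # never, even with -f
    return force or state in DELETABLE_STATES
```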

Example:

deletedataset -f testbed/foo

destroys the foo dataset even if it is currently valid.

Implementation Issues and Caveats

- The contents of ephemeral storage objects will be lost at swapout time!

Did we mention this already? It is important to remember. Blockstore contents and mounts are safe across node reboots; they aren't that ephemeral! You can, of course, explicitly copy off the contents before swapout, make use of an imaging mechanism, or use a persistent storage object if you want a longer life span.

- No MS Windows support for blockstores.

We have no immediate plans to support blockstores on Windows clients.

- Link emulation / bandwidth reservation unavailable.

Emulab's current remote block storage implementation DOES NOT have any means to guarantee bandwidth, or otherwise set up emulated delays, loss, etc. Traffic from your nodes to the shared storage hosts is best effort and subject to the transient aggregate usage from all concurrent experiments. This requires "~" and "0ms" as bandwidth and delay, respectively, in link and lan definitions that include remote storage objects.

- Topology verification fails.

During the initial phases of experiment creation, you may see the swap-in process complain that topology verification failed. Please ignore this until further notice - it doesn't indicate an actual problem (just a befuddlement of the guy implementing this on how to make the verifier grok the syntactic sugar we have in the main parser for storage objects).

- Not compatible with explicit or implicit uses of mkextrafs.

You will not be able to use mkextrafs if you have specified blockstores in your NS file. This includes its implicit use by Emulab, such as by link tracing and the disk agent.

- Modifying a swapped-in experiment with blockstores will not work.

- Support for creating a customized image with ephemeral local blockstores is limited and non-intuitive.

In particular, the only configuration that works correctly is one with a single sysvol blockstore and a "whole disk" image. While you can create a custom image from a node with multiple blockstores or nonsysvol blockstores, it will not boot correctly as state related to those blockstores will be included in the image, but not the blockstores themselves. Similarly if you use a sysvol blockstore but only save a standard "partition" image, the mountpoint appears in /etc/fstab, but the extra partition containing the blockstore data will not be included in the image. The (even more) non-intuitive part is that once you have created the custom image, the extra filesystem is baked into the image and the image cannot be used in future experiments in which the NS specification includes a sysvol blockstore! In other words, the recipe would go something like:

- Create and swapin a one-node experiment using an NS file that includes a standard system image and a sysvol blockstore

- Load data into the blockstore on that node and create a custom image

- Create and swapin a new experiment that uses your custom image on one or more nodes and does not include any sysvol blockstore on those nodes

- Support for volume managers or non-standard filesystems on persistent blockstores is currently non-existent.

It can no doubt be done, but it is going to hurt.

- Mounted filesystems are owned by root.

As created by the storage subsystem, the mounted filesystem will only be writable by root. You can use sudo to change the owner to your own user id. You will only need to do this once; the ownership change will persist across node reboots. For example, the following would change the owner to "jmh" and allow anyone in the "testbed" project to write to the filesystem:

sudo chown jmh:testbed /some/mount
sudo chmod 775 /some/mount