Vnodes

Multiplexed Virtual Nodes in Emulab

Overview

In order to allow experiments with a very large number of nodes, we provide a multiplexed virtual node implementation. If an experiment application's CPU, memory and network requirements are modest, multiplexed virtual nodes (hereafter just "virtual nodes") allow an experiment to use 10-20 times as many nodes as there are available physical machines in Emulab. These virtual nodes currently run only on Fedora 15, using OpenVZ container-based virtualization. Please see the Emulab XEN page for information about our XEN-based virtual nodes; much of the information on this page also applies to them.

Virtual nodes fall between simulated nodes and real, dedicated machines in terms of accuracy of modeling the real world. A virtual node is just a virtual machine running on top of a regular operating system. In particular, our virtual nodes are based on either OpenVZ container-based virtualization or on the XEN hypervisor. Both approaches allow groups of processes to be isolated from each other while running on the same physical machine. Emulab virtual nodes provide isolation of the filesystem, process, network, and account namespaces. That is to say, each virtual node has its own private filesystem, process hierarchy, network interfaces and IP addresses, and set of users and groups. This level of virtualization allows unmodified applications to run as though they were on a real machine. Virtual network interfaces are used to form an arbitrary number of virtual network links. These links may be individually shaped and may be multiplexed over physical links or used to connect to virtual nodes within a single physical node.

With some limitations, virtual nodes can act in any role that a normal Emulab node can: end node, router, or traffic generator. You can run startup commands, ssh into them, run as root, use tcpdump or traceroute, modify routing tables, and even reboot them. You can construct arbitrary topologies of links and LANs, even mixing virtual and real nodes.

The number of virtual nodes that can be multiplexed on a single physical node depends on a variety of factors including the type of virtualization (OpenVZ or XEN), the resource requirements of the application, the type of the underlying node, the bandwidths of the links you are emulating and the desired fidelity of the emulation. See the Advanced Issues section for more info.

Use

Multiplexed virtual nodes are specified in an NS description by indicating that you want the pcvm node type:

set nodeA [$ns node]
tb-set-hardware $nodeA pcvm

or, if you want all virtual nodes to be mapped to the same machine type:

set nodeA [$ns node]
tb-set-hardware $nodeA d710-vm

That's it! With few exceptions, everything you use in an NS file for an Emulab experiment running on physical nodes will work with virtual nodes.

As a simple example, we could take the basic NS script used in the tutorial and add the following lines:

tb-set-hardware $nodeA pcvm
tb-set-hardware $nodeB pcvm
tb-set-hardware $nodeC pcvm
tb-set-hardware $nodeD pcvm

and change the setting of the OS to OPENVZ-STD:

tb-set-node-os $nodeA OPENVZ-STD
tb-set-node-os $nodeB OPENVZ-STD
tb-set-node-os $nodeC OPENVZ-STD
tb-set-node-os $nodeD OPENVZ-STD
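For reference, a complete file along these lines might look like the sketch below. The link and LAN layout (a shaped 30Mb/50ms link between nodeA and nodeB, plus a 100Mb LAN of nodeB, nodeC and nodeD) is an assumption modeled loosely on the tutorial, not a copy of it; the surrounding structure (`new Simulator`, `source tb_compat.tcl`, `$ns rtproto Static`, `$ns run`) is the usual Emulab NS file layout. It only runs inside Emulab's NS parser, not in a plain tclsh.

```tcl
# Sketch of a complete Emulab NS file; the topology details are assumptions.
set ns [new Simulator]
source tb_compat.tcl

# Four nodes, each an OpenVZ virtual node.
set nodeA [$ns node]
set nodeB [$ns node]
set nodeC [$ns node]
set nodeD [$ns node]
foreach node [list $nodeA $nodeB $nodeC $nodeD] {
    tb-set-hardware $node pcvm
    tb-set-node-os $node OPENVZ-STD
}

# A shaped point-to-point link and a small LAN (assumed layout).
set link0 [$ns duplex-link $nodeA $nodeB 30Mb 50ms DropTail]
set lan0 [$ns make-lan "$nodeB $nodeC $nodeD" 100Mb 0ms]

# Let Emulab compute static routes between all nodes.
$ns rtproto Static
$ns run
```

Because every node is a pcvm, the shaped link is handled by end-node shaping on the host rather than by a dedicated delay node.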

and the resulting NS file can be submitted to

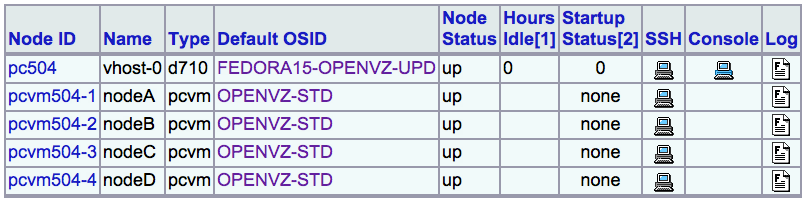

produce a similar topology. Once the experiment has been instantiated,

the experiment web page should include a listing of the reserved nodes that

looks something like:

By looking at the NodeIDs (pcvm504-NN), you can see that all four virtual nodes were assigned to the same physical node (pc504). (At the moment, control over virtual node to physical node mapping is limited. The Advanced Issues section discusses ways in which you can affect the mapping.) Clicking on the ssh icon will log you in to the virtual node. Virtual nodes do not have consoles, so there is no corresponding icon. Note that there is also an entry for the hosting physical node. You can login to it as well, either with ssh or via the console. See the Advanced Issues section for how you can use the physical host. Finally, note that there is no delay node associated with the shaped link. This is because virtual links always use end node shaping.

Logging into a virtual node and running ps ax, you see only the processes associated with your node:

PID TT STAT TIME COMMAND
1883 ?? SsJ 0:00.03 /usr/sbin/syslogd -ss
1890 ?? SsJ 0:00.01 /usr/sbin/cron
1892 ?? SsJ 0:00.28 /usr/sbin/sshd
1903 ?? IJ 0:00.01 /usr/bin/perl -w /usr/local/etc/emulab/watchdog start
5386 ?? SJ 0:00.04 sshd: mike@ttyp1 (sshd)
5387 p1 SsJ 0:00.06 -tcsh (tcsh)
5401 p1 R+J 0:00.00 ps ax

Standard processes include syslog, cron, and sshd, along with the Emulab watchdog process. Note that process IDs inside OpenVZ containers are in fact not virtualized; they are in the physical machine's name space. However, a virtual node still cannot kill a process that is part of another container.

Running df, you see:

Filesystem 1K-blocks Used Avail Capacity Mounted on
/dev/simfs 507999 1484 496356 0% /
ops:/proj/testbed 14081094 7657502 5297105 59% /proj/testbed
ops:/users/mike 14081094 7657502 5297105 59% /users/mike
...

You will notice a private root filesystem, as well as the usual assortment of remote filesystems that all experiments in Emulab receive. Thus you have considerable flexibility in how files are shared, ranging from shared by all nodes (/users/yourname and /proj/projname), to shared by all virtual nodes on a physical node (/local/projname), to private to a virtual node (/local).

Running ip addr reveals:

3: eth999: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
link/ether 02:6f:ac:87:16:52 brd ff:ff:ff:ff:ff:ff
inet 172.19.104.1/12 brd 172.31.255.255 scope global eth999
inet6 fe80::6f:acff:fe87:1652/64 scope link
valid_lft forever preferred_lft forever
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
inet 127.0.0.1/8 scope host lo
inet6 ::1/128 scope host
valid_lft forever preferred_lft forever
14: mv1.1@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc htb state UNKNOWN qlen 50
link/ether 02:0d:3c:ac:3c:fc brd ff:ff:ff:ff:ff:ff
inet 10.1.1.2/24 brd 10.1.1.255 scope global mv1.1
inet6 fe80::d:3cff:feac:3cfc/64 scope link
valid_lft forever preferred_lft forever

Here eth999 is the control net interface. Because routable IP address space is limited, Emulab assigns virtual nodes control net addresses from the non-routable 172.16.0.0/12 range. These addresses are routed within Emulab but are not exposed externally. This means that you can reach this node (including via the DNS name nodeC.vtest.testbed.emulab.net) from ops.emulab.net or from other nodes in your experiment, but not from outside Emulab. If you need to access a virtual node from outside Emulab, you will have to proxy the access via ops or a physical node (that is what the ssh icon in the web page does). mv1.1 is a virtual ethernet device and is the experimental interface for this node; there is one such device for every experimental interface.
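To make the addressing rule concrete, here is a small plain-Tcl sketch (runnable with tclsh 8.5 or later; the proc names are hypothetical and this is not an Emulab API) that checks whether an address lies in 172.16.0.0/12, using the two addresses from the output above.

```tcl
# Convert a dotted-quad IPv4 string (no leading zeros) to a 32-bit integer.
proc ip_to_int {ip} {
    lassign [split $ip .] a b c d
    return [expr {($a << 24) | ($b << 16) | ($c << 8) | $d}]
}

# Return 1 if ip lies inside network/prefixlen, else 0.
proc in_subnet {ip network prefixlen} {
    set mask [expr {(0xFFFFFFFF << (32 - $prefixlen)) & 0xFFFFFFFF}]
    return [expr {([ip_to_int $ip] & $mask) == ([ip_to_int $network] & $mask)}]
}

# 172.16.0.0/12 spans 172.16.0.0 through 172.31.255.255.
proc is_vnode_control_addr {ip} {
    return [in_subnet $ip 172.16.0.0 12]
}

puts [is_vnode_control_addr 172.19.104.1]  ;# eth999 (control net): 1
puts [is_vnode_control_addr 10.1.1.2]      ;# mv1.1 (experimental): 0
```

Only the first address falls in the range that is routed inside Emulab but not outside it, which is why the control interface is reachable from ops but not from the Internet.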

Advanced Issues

Taking advantage of a virtual node host.

A physical node hosting one or more virtual nodes is not itself part of the topology; it exists only to host virtual nodes. However, the physical node is still set up with user accounts and shared filesystems just as a regular node is. Thus you can log in to, and use, the physical node in a variety of ways. Note that we do not advise that you do this, but as with many other aspects of Emulab, we give you all the rope you want; what you do with it is your business!

- The private root filesystem for each virtual node is accessible to the host node. Thus the host can monitor log files and even change files on the fly.

- Other forms of monitoring can be done as well since all processes, filesystems, network interfaces and routing tables are visible in the host. For instance, you can run tcpdump on a virtual interface outside the node rather than inside it. You can also tcpdump on a physical interface on which many virtual nodes' traffic is multiplexed.

We should emphasize, however, that virtual nodes are not "performance isolated" from each other or from the host; for example, a CPU-hogging monitor application in the host might affect the performance and behavior of the hosted virtual nodes.

Creating a custom image

Emulab allows you to create a Custom OS Image of a virtual node, much like you are able to create a custom image to run on a physical node. Your custom image is saved and loaded in much the same way as described for physical nodes in the tutorial.

The difference is in how you create the image after you have set up your virtual node the way you want it. Once you are ready, go to the Show Experiment page for your experiment and click on the node you want to save. One of the menu options is "Create a Disk Image". Click on that link and follow the instructions. If you are customizing one of the Emulab-provided images for the first time, you will need to complete the form and click submit. If you are updating your own image, you just need to click the confirmation button.

Controlling virtual node layout.

Normally, the Emulab resource mapper, assign, maps virtual nodes onto physical nodes so as to achieve the best overall use of physical resources without violating any of the constraints of the virtual nodes or links. In a nutshell, it packs as many virtual nodes onto a physical node as it can without exceeding the node's internal or external network bandwidth capabilities and without exceeding a node-type-specific static packing factor. Internal network bandwidth is an empirically derived value for how much network data can be moved through internally connected virtual ethernet interfaces. External network bandwidth is determined by the number of physical interfaces available on the node. The static packing factor is intended as a coarse metric of the CPU and memory load that a physical node can support; currently it is based strictly on the amount of physical memory in each node type. The current values for these constraints are:

- Internal network bandwidth: 400Mb/sec for all node types

- External network bandwidth: 400Mb/sec (4 x 100Mb NICs) for all node types

- Packing factor: 10 for pc600s and pc1500s, 20 for pc850s and pc2000s
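The three limits above can be read as a simple per-host check. The plain-Tcl sketch below (runnable with tclsh; the proc and variable names are hypothetical) is a deliberate simplification: it assumes the caller has already totalled the internal and external bandwidth of a proposed placement, whereas assign weighs the whole topology at once.

```tcl
# Constraint values as listed above; the names are hypothetical.
set INTERNAL_BW_LIMIT 400   ;# Mb/sec through internal virtual interfaces
set EXTERNAL_BW_LIMIT 400   ;# Mb/sec over the host's physical NICs
array set PACKING_FACTOR {pc600 10 pc1500 10 pc850 20 pc2000 20}

# Return 1 if putting nvnodes virtual nodes on one host of the given type,
# with the given aggregate internal and external bandwidth (Mb/sec), stays
# within all three constraints; return 0 otherwise.
proc placement_ok {type nvnodes internal_mb external_mb} {
    global INTERNAL_BW_LIMIT EXTERNAL_BW_LIMIT PACKING_FACTOR
    if {$nvnodes > $PACKING_FACTOR($type)} { return 0 }
    if {$internal_mb > $INTERNAL_BW_LIMIT} { return 0 }
    if {$external_mb > $EXTERNAL_BW_LIMIT} { return 0 }
    return 1
}

puts [placement_ok pc850 20 300 100]   ;# 1
puts [placement_ok pc600 12 100 0]     ;# 0: packing factor is 10
puts [placement_ok pc2000 5 500 0]     ;# 0: internal bandwidth exceeded
```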

The mapper generally produces an "unsurprising" mapping of virtual nodes to physical nodes (e.g., mapping small LANs all on the same physical host) and where it doesn't, it is usually because doing so would violate one of the constraints. One exception involves LANs.

One might think that an entire 100Mb LAN, regardless of the number of members, could be located on a single physical host, since the internal bandwidth of a host is 400Mb/sec. Alas, this is not the case. A LAN is modeled in Emulab as a set of point-to-point links to a "LAN node," so the LAN node sees 100Mb/sec from every LAN member. For the purposes of bandwidth allocation, a LAN node must be mapped to a physical host just as any other node is. The difference is that a LAN node may be mapped to a switch, which has "unlimited" internal bandwidth, as well as to a node. Now consider a 100Mb/sec LAN with 5 members. If the LAN node is colocated with the other nodes on the same physical host, the placement is a violation, because the LAN node requires 500Mb/sec of bandwidth. If instead the LAN node is mapped to a switch, it is still a violation, because now 500Mb/sec is needed between the physical host and the switch, and only 400Mb/sec is available there as well. Thus you can only have 4 members of a 100Mb/sec LAN on any single physical host. You can, however, have 4 members on each of many physical hosts to form a large LAN; in this case the LAN node is located on the switch. The same reasoning limits a 50Mb/sec LAN to 8 members per host and a 20Mb/sec LAN to 20 members per host; in general, the members of a LAN placed on one host cannot have an aggregate bandwidth above 400Mb/sec. And of course, you must take into consideration the bandwidth of all other links and LANs on a node. Now you know why we have a complex program to do this!
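The per-host LAN limit is just integer division of the host's 400Mb/sec by the per-member bandwidth. The plain-Tcl sketch below (hypothetical helper names, not part of Emulab) reproduces the figures above and estimates how many hosts a larger LAN needs; like the paragraph's first approximation, it ignores every other link and LAN as well as the packing factor.

```tcl
# Members of one LAN that fit on a single host when their aggregate
# bandwidth (members x per-member bandwidth) may not exceed the limit.
proc max_lan_members_per_host {lan_mb {host_limit_mb 400}} {
    # Both values are integers, so expr performs floor division.
    return [expr {$host_limit_mb / $lan_mb}]
}

# Minimum number of hosts needed to spread a LAN of the given size,
# ignoring other links, other LANs and the packing factor.
proc min_hosts_for_lan {members lan_mb} {
    set per_host [max_lan_members_per_host $lan_mb]
    if {$per_host == 0} { error "LAN bandwidth exceeds the per-host limit" }
    # Ceiling division for positive integers.
    return [expr {($members + $per_host - 1) / $per_host}]
}

foreach bw {100 50 20} {
    puts "$bw Mb/sec LAN: at most [max_lan_members_per_host $bw] members per host"
}
puts "30-member 100Mb/sec LAN: at least [min_hosts_for_lan 30 100] hosts"
```

This prints 4, 8 and 20 members per host, and 8 hosts for the 30-member LAN.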

Anyway, if you are still not deterred and feel you can do a better job of virtual to physical node mapping yourself, there are a few ways to do this. Note carefully though that none of these will allow you to violate the bandwidth and packing constraints listed above.

The NS-extension tb-set-colocate-factor command allows you to globally decrease (not increase!) the maximum number of virtual nodes per physical node. This command is useful if you know the application load you are running in the vnodes is going to require more resources per instance (e.g., a Java DHT), and that the Emulab-picked values of 10-20 per physical node are just too high. Note that currently, this is not really a "factor"; it is an absolute value. Setting it to 5 will reduce the capacity of all node types to 5, whether they were 10 or 20 by default.
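In the NS file the setting is a single global command; the plain-Tcl sketch below shows it in a comment (assuming it takes the value as its only argument) and then models its documented effect with a hypothetical helper, `effective_capacity`, which is not an Emulab API. It requires Tcl 8.5 or later for `min()`.

```tcl
# In an NS file (assumed form):
#     tb-set-colocate-factor 5
# The helper below only models what that setting does to capacity.
array set DEFAULT_PACKING {pc600 10 pc1500 10 pc850 20 pc2000 20}

proc effective_capacity {type colocate} {
    global DEFAULT_PACKING
    # The value is an absolute cap, not a multiplier, and it can only
    # lower the per-type default, never raise it.
    return [expr {min($DEFAULT_PACKING($type), $colocate)}]
}

foreach type {pc600 pc850} {
    puts "$type with 5: [effective_capacity $type 5] vnodes per host"
}
puts "pc600 with 15: [effective_capacity pc600 15] vnodes per host"
```

Both node types drop to 5, while asking for 15 on a pc600 leaves it at its default of 10.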

If the packing factor is ok, but assign just won't colocate virtual nodes the way you want, you can resort to trying to do the mapping by hand using tb-fix-node. This technique is not for the faint of heart (or weak of stomach) as it involves mapping virtual nodes to specific physical nodes, which you must determine in advance are available. For example, the following code snippet will allocate 8 nodes in a LAN and force them all onto the same physical host (pc41):

set phost pc41      ;# physical node to use
set phosttype 850   ;# type of physical node, e.g. pc850

# Force virtual nodes in a LAN to one physical host
set lanstr ""
for {set j 1} {$j <= 8} {incr j} {
    set n($j) [$ns node]
    append lanstr "$n($j) "
    tb-set-hardware $n($j) pcvm${phosttype}
    tb-fix-node $n($j) $phost
}

set lan [$ns make-lan "$lanstr" 10Mb 0ms]

If the host is not available, this will fail. Note again, that "fixing" nodes will still not allow you to violate any of the fundamental mapping constraints.

There is one final technique that will allow you to circumvent assign and the bandwidth constraints above. The NS-extension tb-set-noshaping can be used to turn off link shaping for a specific link or LAN, e.g.:

tb-set-noshaping $lan 1

added to the NS snippet above would allow you to specify "1Mb" for the LAN bandwidth and map 20 virtual nodes to the same physical host, but then not be bound by the bandwidth constraint later. In this way assign would map your topology, but no enforcement would be done at runtime. Specifically, this tells Emulab not to set up ipfw rules and dummynet pipes on the specified interfaces. One semi-legitimate use of this command is the case where you know that your applications will not exceed a certain bandwidth, and you don't want to incur the ipfw/dummynet overhead associated with explicitly enforcing the limits. Note that, as the name implies, this turns off all shaping of a link, not just the bandwidth constraint. So if you need delays or packet loss, don't use this.
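Put together, the variant just described might look like the sketch below: the tb-fix-node snippet grown to 20 members, with a nominal 1Mb LAN and shaping turned off. It assumes, as that snippet does, that pc41 is a pc850 (packing factor 20) and is free; the wrapper lines follow the usual Emulab NS file layout, and the file only runs inside Emulab's NS parser.

```tcl
set ns [new Simulator]
source tb_compat.tcl

set phost pc41      ;# example host from the snippet above; must be free
set phosttype 850   ;# pc850s have a packing factor of 20

set lanstr ""
for {set j 1} {$j <= 20} {incr j} {
    set n($j) [$ns node]
    append lanstr "$n($j) "
    tb-set-hardware $n($j) pcvm${phosttype}
    tb-fix-node $n($j) $phost
}

# 20 members x 1Mb = 20Mb aggregate, well within the 400Mb/sec limits,
# so assign accepts the placement...
set lan [$ns make-lan "$lanstr" 1Mb 0ms]
# ...and with shaping off, nothing holds the LAN to 1Mb at runtime.
tb-set-noshaping $lan 1

$ns rtproto Static
$ns run
```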

How do I know what the right colocate factor is?

The hardest issue when using virtual nodes is determining how many virtual nodes you can colocate on a physical node, without affecting the fidelity of the experiment. Ultimately, the experimenter must make this decision, based on the nature of the applications run and what exactly is being measured. We provide some simple limits (e.g., network bandwidth caps) and coarse-grained aggregate limits (e.g., the default colocation factor) but these are hardly adequate.

One thing to try is to allocate a modest-sized version of your experiment, say 40-50 nodes, using just physical nodes, and compare it to the same experiment with 40-50 virtual nodes at various packing factors.
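One way to run that comparison is to drive every run from a single NS file. In the sketch below, the variables use_vnodes, packing and num_nodes are hypothetical knobs invented for illustration, the 10Mb LAN is a placeholder for your real topology, and the OS is left at the default (in practice you would want comparable images on both node types). As noted above, tb-set-colocate-factor can only lower the per-type default, so values above 10 or 20 have no effect.

```tcl
# Hypothetical knobs; change them between runs.
set use_vnodes 1     ;# 1 = pcvm virtual nodes, 0 = dedicated pc nodes
set packing 10       ;# vnodes per host for the virtual runs
set num_nodes 40

set ns [new Simulator]
source tb_compat.tcl

if {$use_vnodes} {
    tb-set-colocate-factor $packing
}

set lanstr ""
for {set j 1} {$j <= $num_nodes} {incr j} {
    set n($j) [$ns node]
    append lanstr "$n($j) "
    if {$use_vnodes} {
        tb-set-hardware $n($j) pcvm
    } else {
        tb-set-hardware $n($j) pc
    }
}

# Placeholder topology; substitute your real experiment here.
set lan [$ns make-lan "$lanstr" 10Mb 0ms]

$ns rtproto Static
$ns run
```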

We are currently working on techniques that will let you specify performance constraints and have the experiment run and self-adjust until it reaches a packing factor that doesn't violate those constraints.

Mixing virtual and physical nodes.

It is possible to mix virtual nodes and physical nodes in the same experiment. For example, we could set up a LAN, similar to the above example, such that half the nodes are virtual (pcvm) and half are physical (pc):

set lanstr ""
for {set j 1} {$j <= 8} {incr j} {
    set n($j) [$ns node]
    append lanstr "$n($j) "
    if {$j & 1} {
        tb-set-hardware $n($j) pcvm
    } else {
        tb-set-hardware $n($j) pc
        tb-set-node-os $n($j) FBSD-STD
    }
}

set lan [$ns make-lan "$lanstr" 10Mb 0ms]

We have also implemented a non-encapsulating version of the virtual ethernet interface that allows virtual nodes to talk directly to physical ethernet interfaces, which removes the reduced-MTU restriction. To use the non-encapsulating version, put:

tb-set-encapsulate 0

in your NS file.
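The reduced MTU matters in practice when an application sizes UDP datagrams to avoid IP fragmentation. The plain-Tcl sketch below applies the 16-byte reduction stated in the Limitations section and assumes a standard 1500-byte Ethernet MTU underneath, an IPv4 header without options (20 bytes) and a UDP header (8 bytes); the names are hypothetical.

```tcl
# Assumptions: standard Ethernet MTU, IPv4 without options, plain UDP.
set ETHERNET_MTU 1500
set VETH_ENCAP_OVERHEAD 16   ;# MTU reduction of the encapsulating veth
set IPV4_HEADER 20
set UDP_HEADER 8

# Largest UDP payload that fits in one packet without fragmentation.
proc max_udp_payload {mtu} {
    global IPV4_HEADER UDP_HEADER
    return [expr {$mtu - $IPV4_HEADER - $UDP_HEADER}]
}

set encap_mtu [expr {$ETHERNET_MTU - $VETH_ENCAP_OVERHEAD}]
puts "non-encapsulating: MTU $ETHERNET_MTU, max UDP payload [max_udp_payload $ETHERNET_MTU]"
puts "encapsulating:     MTU $encap_mtu, max UDP payload [max_udp_payload $encap_mtu]"
```

Under these assumptions the largest unfragmented UDP payload drops from 1472 to 1456 bytes with encapsulation.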

Performance

We've done some basic performance characterization of our Linux virtual node implementations, described in an excerpt from a report we wrote while re-examining Emulab's scalability and extensibility to support new technologies. These results may help you determine how many virtual nodes you can safely pack onto a single physical node by giving some intuition about the resource overheads of virtual nodes. However, your experiment will likely stress the implementation differently than our tests did, so be cautious!

The report is attached to this page as report-0.6-vnode-scaling.pdf.

Limitations

Following are the primary limitations of the Emulab virtual node implementation.

- Not a complete virtualization of a node. We make no claims about being a true x86 or even Linux virtual machine. We build on existing mechanisms with the primary goal of providing functional transparency to applications. We are even more lax in that we assume that all virtual nodes on a physical host belong to the same experiment. This reduces the security concerns considerably. For example, if a virtual node is able to crash the physical machine or is able to see data outside its scope, it only affects the particular experiment. This is not to say that we are egregious in our violation. One particular example is that virtual nodes are allowed to read /dev/mem. This made things much easier, as we did not have to either virtualize /dev/mem or rewrite lots of system utilities that use it. The consequence is that virtual nodes can spy on each other if they want. But then, if you cannot trust yourself, who can you trust!

- Not a complete virtualization of the network. This is another aspect of the previous bullet, but bears special note. While we have virtual interfaces and routing tables, much of the network stack of a physical host remains shared, in particular all the resources used by the higher level protocols. For example, all of the statistics reported by "netstat -s" are global to the node.

- No resource guarantees for CPU and memory on nodes. We also don't provide complete performance isolation. We currently have no virtual-node-aware CPU scheduling mechanisms; processes in virtual nodes are just processes on the real machine. There are also no limits on virtual or physical memory consumption by a virtual node.

- Nodes must run a specific version of Fedora (currently Fedora 15, as noted in the Overview).

- Will only scale to low 1000s of nodes. We currently have a number of scaling issues that make it impractical to run experiments of more than 1000-2000 nodes. These range from algorithmic issues in the resource mapper and route calculator, to physical issues like too few and too feeble physical nodes, to user interface issues like how to present a listing or visualization of thousands of nodes in a useful way.

- Virtual nodes are not externally visible. Due to a lack of routable IP space, virtual nodes are given non-routable control net addresses and thus cannot be accessed directly from outside Emulab. You must use a suitable proxy or access them from the Emulab user-login server.

- Virtual ethernet encapsulation reduces the MTU. This is a detail, but of possible importance to people doing network experiments. By default, the veth device reduces the MTU by 16 bytes. As mentioned above, we also have a version of the interface that does not use encapsulation (tb-set-encapsulate 0).

- Only 400Mb of internal "network" bandwidth. This falls in the rinky-dink node category. As most of our nodes are based on ancient 100MHz FSB, sub-GHz technology, they cannot host many virtual nodes or high-capacity virtual links. The next wave of cluster machines will be much better in this regard.

- No node consoles. Virtual nodes do not have a virtual console. If we discover a need for one, we will implement it.

- Must use "linkdelays." To enable topology-on-a-single-node configurations and to conserve physical resources in the face of large topologies, we use on-node traffic shaping rather than dedicated traffic shaping nodes. This increases the overhead on the host machine slightly.

Known Bugs

Many!

Technical Details

Under Construction!