Nse

Hybrid Experiments with Simulation and Emulation

THIS PAGE IS OBSOLETE; NSE SIMULATION IS NO LONGER SUPPORTED

Overview

Emulab has integrated network simulation using NS Emulation (NSE), enabling an experimenter to combine simulation and real hardware emulation in a single experiment. The advantages of such an integrated experiment are:

- Validation of experimental simulation models against real traffic

- Exposing experimental real traffic to congestion-reactive cross traffic derived from a rich variety of existing, validated simulation models

- Scaling to larger topologies than would be possible with real elements alone, by multiplexing simulated elements on physical resources.

The primary advantage of using Emulab to perform such experiments is the complete automation we built when integrating NSE. Here is a list of issues that we have addressed since our first version of Emulab NSE integration:

- Support for multiple distributed NSE instances in the same experiment in order to scale to larger topologies.

- Support for an NS duplex-link between simulated nodes on different physical hosts (i.e. NSE instances)

- Encapsulation/decapsulation of simulator packets, so that arbitrary traffic agents can talk to each other across physical hosts (i.e. NSE instances)

- Use of IP-address-based routes for global routing of packets

- Use of the multiple-routing-table support we added to FreeBSD to ensure correct routing of live packets injected into the network. Without it, correct routing was a problem for NSE in certain configurations on a multi-homed PC host.

- Use of the virtual Ethernet device we added to FreeBSD to multiplex virtual links on physical links

- Integration of NSE with Emulab's event system to deliver the events specified in $ns at statements. This is necessary because NSE is distributed and events must be delivered to different instances. A central event scheduler is responsible for delivering such events at the right time.
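The central event scheduler described in the list above can be illustrated with a minimal Python sketch: it orders $ns at events by time and routes each one to the host that owns its target object. It is not Emulab's event system. The Event and CentralScheduler classes, their methods, and the target labels (simhost-0, real1) are hypothetical, and "delivery" is modeled by recording events rather than sending messages.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(order=True)
class Event:
    time: float                           # experiment time from the "$ns at" statement
    seq: int                              # tie-breaker: equal times keep insertion order
    target: str = field(compare=False)    # hypothetical label of the receiving instance/host
    command: str = field(compare=False)   # Tcl command string, e.g. "$ftp1 send 75000000"


class CentralScheduler:
    """Hypothetical central scheduler: time-ordered queue, one delivery list per target."""

    def __init__(self) -> None:
        self._queue: List[Event] = []
        self._seq = 0
        self.delivered: Dict[str, List[Tuple[float, str]]] = {}

    def schedule(self, time: float, target: str, command: str) -> None:
        heapq.heappush(self._queue, Event(time, self._seq, target, command))
        self._seq += 1

    def run_until(self, now: float) -> None:
        """Deliver, in time order, every queued event whose time is <= now."""
        while self._queue and self._queue[0].time <= now:
            ev = heapq.heappop(self._queue)
            # A real system would send a message to the remote instance here;
            # this sketch only records what each target would receive.
            self.delivered.setdefault(ev.target, []).append((ev.time, ev.command))


sched = CentralScheduler()
sched.schedule(10.0, "real1", "$cbr0 start")
sched.schedule(1.0, "simhost-0", "$ftp1 send 75000000")
sched.run_until(5.0)
print(sched.delivered)  # {'simhost-0': [(1.0, '$ftp1 send 75000000')]}
```

A single priority queue keyed on time is the simplest way to guarantee a global delivery order across instances. How Emulab actually clocks and transports these events is outside what this page describes.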

Some of the issues we addressed are shared with multiplexed virtual nodes, so our solutions, such as multiple-routing-table and virtual-ethernet-device support, are used in both the simulation and virtual-node subsystems. This enables large-scale integrated experiments that include PC nodes, virtual nodes and simulated resources all in the same experiment. Note that simulated resources such as nodes are abstractions that do not have nearly the same level of virtualization as real PCs or virtual nodes: there is no equivalent of logging in, running unmodified applications, etc. on a simulated node.

The number of simulated nodes that can be multiplexed on each PC depends mainly on how much traffic passes through a node. Because this is difficult to estimate statically, we initially use a simple co-location factor to map the simulated nodes optimistically. If the initial mapping causes an overload (i.e. one or more NSE instances fall behind real time), the system re-maps more conservatively. Read the Technical Details section for the gory details.

An experimenter can also guide the initial mapping by specifying the maximum co-location factor with tb-set-colocate-factor. The number of simulation objects that can be supported without falling behind real time depends on the amount of external traffic and the number of internal simulation events that need to be processed. See the Scaling and Accuracy and Capacity sections under Advanced Topics for guidance on what co-location factor to specify.
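The optimistic-then-conservative mapping described above can be illustrated with a small Python sketch. It is hypothetical and far simpler than Emulab's real mapper, which the page does not describe. Here pack_sim_nodes fills simulation hosts in list order with at most colocate_factor nodes each (ignoring topology), and map_with_backoff halves the factor, an assumed policy, whenever a caller-supplied falls_behind check reports that some host cannot keep up with real time.

```python
from typing import Callable, List, Tuple


def pack_sim_nodes(nodes: List[str], colocate_factor: int) -> List[List[str]]:
    """Place at most colocate_factor simulated nodes on each simulation host, in list order."""
    if colocate_factor < 1:
        raise ValueError("colocate_factor must be at least 1")
    return [nodes[i:i + colocate_factor] for i in range(0, len(nodes), colocate_factor)]


def map_with_backoff(
    nodes: List[str],
    colocate_factor: int,
    falls_behind: Callable[[List[str]], bool],  # hypothetical overload check per host
) -> Tuple[int, List[List[str]]]:
    """Start optimistic; while any host would fall behind real time, re-map more conservatively."""
    factor = colocate_factor
    while True:
        hosts = pack_sim_nodes(nodes, factor)
        if factor == 1 or not any(falls_behind(h) for h in hosts):
            return factor, hosts
        factor = max(1, factor // 2)  # assumption: halve the factor after each overload


sim_nodes = ["sim1", "sim2", "simrouter1", "simrouter2"]
print(len(pack_sim_nodes(sim_nodes, 2)))  # 2
```

With the four simulated nodes of the dumbbell example and a factor of 2, pack_sim_nodes yields two simulation hosts, the same host count that tb-set-colocate-factor 2 produces in that example.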

The simulation output, including errors such as those reporting an inability to keep up with real time, is logged to the files /proj/<project_name>/exp/<experiment_name>/logs/nse-simhost-0...n.log

Use

To create an experiment with simulated resources in it, a user simply encloses a block of NS Tcl code in $ns make-simulated { }. Connections between simulated and physical nodes are specified as usual. Multiple make-simulated blocks are allowed in a single experiment; all such blocks are concatenated.

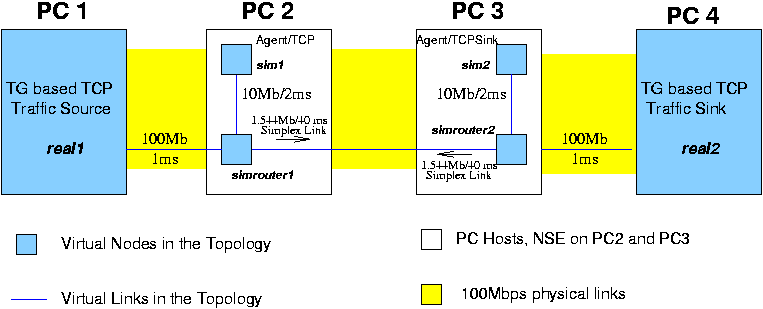

Figure 1. A hybrid experiment with simulation and emulation

The following code gives an example of an experiment with a dumbbell topology composed of both real PC nodes and simulated nodes, as illustrated in Figure 1.

source tb_compat.tcl
set ns [new Simulator]

# Enable automatic static routing
$ns rtproto Static

set real1 [$ns node]
set real2 [$ns node]

# Emulab folks all like FreeBSD more!
tb-set-node-os $real1 FBSD-STD
tb-set-node-os $real2 FBSD-STD

$ns make-simulated {
    # All the code here runs in the simulation
    set sim1 [$ns node]
    set sim2 [$ns node]
    set simrouter1 [$ns node]
    set simrouter2 [$ns node]

    # Bottleneck link inside the simulation. Simulated
    # and real traffic share this link and interfere
    # with each other
    $ns duplex-link $simrouter1 $simrouter2 1.544Mb 40ms DropTail

    # More duplex links inside the simulation
    $ns duplex-link $sim1 $simrouter1 10Mb 2ms DropTail
    $ns duplex-link $sim2 $simrouter2 10Mb 2ms DropTail

    # TCP agent in simulation on node sim1
    set tcp1 [new Agent/TCP]
    $ns attach-agent $sim1 $tcp1

    # FTP application object in simulation on node sim1
    set ftp1 [new Application/FTP]
    $ftp1 attach-agent $tcp1

    # TCPSink object in simulation on node sim2
    set tcpsink1 [new Agent/TCPSink]
    $ns attach-agent $sim2 $tcpsink1

    # Do a connect to tell the system that
    # $tcp1 and $tcpsink1 agents will talk to each other
    $ns connect $tcp1 $tcpsink1

    # Starting at time 1.0, send 75MB of data
    $ns at 1.0 "$ftp1 send 75000000"
}

# Connect real and simulated nodes
$ns duplex-link $real1 $simrouter1 100Mb 2ms DropTail
$ns duplex-link $real2 $simrouter2 100Mb 2ms DropTail

# A real TCP traffic agent on PC real1
set tcpreal1 [new Agent/TCP]
$ns attach-agent $real1 $tcpreal1
set cbr0 [new Application/Traffic/CBR]
$cbr0 attach-agent $tcpreal1

# A real TCP sink traffic agent on PC real2
set tcprealsink1 [new Agent/TCPSink]
$ns attach-agent $real2 $tcprealsink1

# Tell the system that tcpreal1 will talk to
# tcprealsink1
$ns connect $tcpreal1 $tcprealsink1

# Start traffic generator at time 10.0
$ns at 10.0 "$cbr0 start"

# Drastically reduce colocation factor for simulated nodes
# to demonstrate distributed NSE. With this, the 4 simulated
# nodes will be mapped to 2 PCs. Simulator packets from sim1
# to sim2 will be encapsulated and transported over a physical
# link
tb-set-colocate-factor 2

$ns run

The above dumbbell topology of 6 nodes will be mapped to 4 PCs in Emulab. Note that this is a very low multiplexing factor, chosen to keep the example simple. Two simulation host PCs are allocated automatically by the system. The code in the make-simulated block is automatically re-parsed into two Tcl sub-specifications, each of which is fed into an instance of NSE running on a simulation host. Depending on how the mapping happens, either one or two simulated links can cross PCs; Figure 1 has one such link. Simulator packet flows that cross such links are automatically compressed, encapsulated in an IP packet and shipped over the physical link.
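Which simulated links cross PCs depends only on the host each simulated node lands on. The Python sketch below counts crossing links for two possible placements of the example's four simulated nodes. The placements are illustrative assumptions, not necessarily the one Emulab chooses or the one drawn in Figure 1, and crossing_links is a hypothetical helper.

```python
from typing import Dict, List, Tuple

Link = Tuple[str, str]

# The three simulated duplex links from the make-simulated block in the example.
SIM_LINKS: List[Link] = [
    ("simrouter1", "simrouter2"),  # 1.544Mb bottleneck
    ("sim1", "simrouter1"),
    ("sim2", "simrouter2"),
]


def crossing_links(links: List[Link], host_of: Dict[str, int]) -> List[Link]:
    """Return the links whose two endpoints are mapped to different simulation hosts."""
    return [(a, b) for a, b in links if host_of[a] != host_of[b]]


# Illustrative placement A: each end node shares a host with its router.
placement_a = {"sim1": 0, "simrouter1": 0, "sim2": 1, "simrouter2": 1}
# Illustrative placement B: both end nodes on one host, both routers on the other.
placement_b = {"sim1": 0, "sim2": 0, "simrouter1": 1, "simrouter2": 1}

print(crossing_links(SIM_LINKS, placement_a))  # [('simrouter1', 'simrouter2')]
print(crossing_links(SIM_LINKS, placement_b))  # [('sim1', 'simrouter1'), ('sim2', 'simrouter2')]
```

Only the links returned here would carry encapsulated simulator packets over a physical link; all other simulated links stay inside a single NSE instance.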

nse support is still under further development. Please let us know if you face problems in this system. Here are some caveats:

- Enabling NS tracing causes heavy file I/O overhead, which prevents nse from keeping up with real time. Therefore, do not enable tracing.

- There is no support for NS's dynamic routing. Only static routing is supported.

- The support for automatically parsing simulation specifications into Tcl sub-specifications is still in beta. We will enhance it as usage requires.

Advanced Topics

Scaling

The scaling, accuracy and capacity data reported here are from our OSDI publication.

An instance of nse simulated 2 Mb/s constant-bit-rate UDP flows between pairs of nodes on 2 Mb/s links with 50 ms latencies. To measure nse's ability to keep pace with real time, and thus with live traffic, a similar link was instantiated inside the same nse simulation to forward live TCP traffic between two physical Emulab nodes, again at a rate of 2 Mb/s. On an 850 MHz PC, we were able to scale up to 150 simulated links and 300 simulated nodes while maintaining the full throughput of the live TCP connection. With additional simulated links, the throughput dropped precipitously. We also measured nse's TCP model on the simulated links: performance dropped after 80 simulated links because of the higher event rate generated by acknowledgment traffic on the return path.

Accuracy and Capacity

As a capacity test, we generated streams of UDP round-trip traffic between two nodes, with an interposed 850 MHz PC running nse on a FreeBSD 4.5 kernel built with HZ=1000. Over a range of packet rates and link delays, using 64-byte and 1518-byte packets, we determined a maximum stable packet rate of 4000 packets per second. Since these are round-trip measurements, the actual packet rates are twice the numbers reported. At this capacity, we performed experiments to measure the delay, bandwidth and loss rates for representative values. The results are summarized in Tables 1, 2 and 3.
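The arithmetic behind the capacity figure can be sketched in Python. The sketch reads the round-trip remark as meaning that each round trip crosses the nse host twice, so the host forwards 2 × 4000 = 8000 packets per second. The bit-rate helper is an illustration that counts only the 64-byte and 1518-byte packet sizes and ignores Ethernet preamble and inter-frame gap. Both function names are hypothetical.

```python
REPORTED_PPS = 4000        # maximum stable round-trip packet rate from the capacity test
PACKET_SIZES = (64, 1518)  # packet sizes used in the test, in bytes


def forwarded_pps(reported_pps: int) -> int:
    """Each round trip crosses the nse host twice, so it forwards twice the reported rate."""
    return 2 * reported_pps


def forwarded_mbps(pps: int, size_bytes: int) -> float:
    """Aggregate bit rate through the nse host in Mb/s (1 Mb = 10**6 bits), no framing overhead."""
    return pps * size_bytes * 8 / 1e6


for size in PACKET_SIZES:
    print(size, forwarded_mbps(forwarded_pps(REPORTED_PPS), size))
# 64-byte packets: about 4.1 Mb/s; 1518-byte packets: about 97.2 Mb/s
```

The large gap between the two bit rates suggests that, at this limit, the per-packet cost of simulation rather than raw bandwidth bounds nse's capacity.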

Emulab's integration of nse is much less mature than its support for dummynet-based emulation. This is reflected in the large percentage errors in bandwidth and loss rates. Integrating nse has already uncovered a number of problems that have since been solved; as we continue to gain experience with nse, we expect the situation to improve.

+-------+-------------+----------+----------+----------+----------+----------+
| delay | packet size | observed | observed | observed | adjusted | adjusted |
| (ms)  | (bytes)     | RTT (ms) | stddev   | %err     | RTT (ms) | %err     |
+=======+=============+==========+==========+==========+==========+==========+
| 0     | 64          | 0.238    | 0.004    | N/A      | N/A      | N/A      |
+-------+-------------+----------+----------+----------+----------+----------+
| 0     | 1518        | 1.544    | 0.025    | N/A      | N/A      | N/A      |
+-------+-------------+----------+----------+----------+----------+----------+
| 5     | 64          | 10.251   | 0.295    | 2.51     | 10.013   | 0.13     |
+-------+-------------+----------+----------+----------+----------+----------+
| 5     | 1518        | 11.586   | 0.067    | 15.86    | 10.032   | 0.32     |
+-------+-------------+----------+----------+----------+----------+----------+
| 10    | 64          | 20.255   | 0.014    | 1.28     | 20.017   | 0.09     |
+-------+-------------+----------+----------+----------+----------+----------+
| 10    | 1518        | 21.675   | 0.093    | 8.38     | 20.121   | 0.61     |
+-------+-------------+----------+----------+----------+----------+----------+
| 50    | 64          | 100.474  | 0.029    | 0.47     | 100.236  | 0.24     |
+-------+-------------+----------+----------+----------+----------+----------+
| 50    | 1518        | 102.394  | 3.440    | 2.39     | 100.840  | 0.84     |
+-------+-------------+----------+----------+----------+----------+----------+
| 300   | 64          | 601.690  | 0.546    | 0.28     | 601.452  | 0.24     |
+-------+-------------+----------+----------+----------+----------+----------+
| 300   | 1518        | 602.999  | 0.093    | 0.49     | 601.445  | 0.24     |
+-------+-------------+----------+----------+----------+----------+----------+

Table 1: Accuracy of nse delay at maximum packet rate (4000 PPS) as a function of packet size and link delay. The 0ms measurement represents the base overhead of the link. Adjusted RTT is the observed value minus the base overhead.
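The adjusted columns of Table 1 follow from two rules. First, subtract the 0 ms base overhead from the observed RTT. Second, measure %err against the ideal RTT, which is twice the one-way link delay. The Python sketch below applies these rules to the 64-byte rows. The dictionary restates those table values, and the helper names are hypothetical.

```python
# Restated from the 64-byte rows of Table 1 (all values in ms).
BASE_OVERHEAD_MS = 0.238  # observed RTT over the 0 ms link
OBSERVED_RTT_MS = {5: 10.251, 10: 20.255, 50: 100.474, 300: 601.690}  # link delay -> RTT


def pct_err(rtt_ms: float, delay_ms: float) -> float:
    """Percent error of an RTT against the ideal RTT of twice the one-way link delay."""
    ideal = 2 * delay_ms
    return 100 * (rtt_ms - ideal) / ideal


def adjusted_rtt(observed_ms: float) -> float:
    """Remove the base overhead measured on the 0 ms link."""
    return observed_ms - BASE_OVERHEAD_MS


for delay, observed in OBSERVED_RTT_MS.items():
    adj = adjusted_rtt(observed)
    print(f"{delay:>3} ms: observed {pct_err(observed, delay):.2f}%, "
          f"adjusted {adj:.3f} ms ({pct_err(adj, delay):.2f}%)")
```

Subtracting the base overhead removes the fixed per-packet cost of the path, which dominates the error at small delays.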

Technical Details

TODO